+

+  +

+

+ + +

+ +[Ultralytics](https://ultralytics.com) [YOLOv8](https://github.com/ultralytics/ultralytics) is a cutting-edge, state-of-the-art (SOTA) model that builds upon the success of previous YOLO versions and introduces new features and improvements to further boost performance and flexibility. YOLOv8 is designed to be fast, accurate, and easy to use, making it an excellent choice for a wide range of object detection and tracking, instance segmentation, image classification and pose estimation tasks. + +We hope that the resources here will help you get the most out of YOLOv8. Please browse the YOLOv8 Docs for details, raise an issue on GitHub for support, and join our Discord community for questions and discussions! + +To request an Enterprise License please complete the form at [Ultralytics Licensing](https://ultralytics.com/license). + +

+

+

+

+

+

+Install

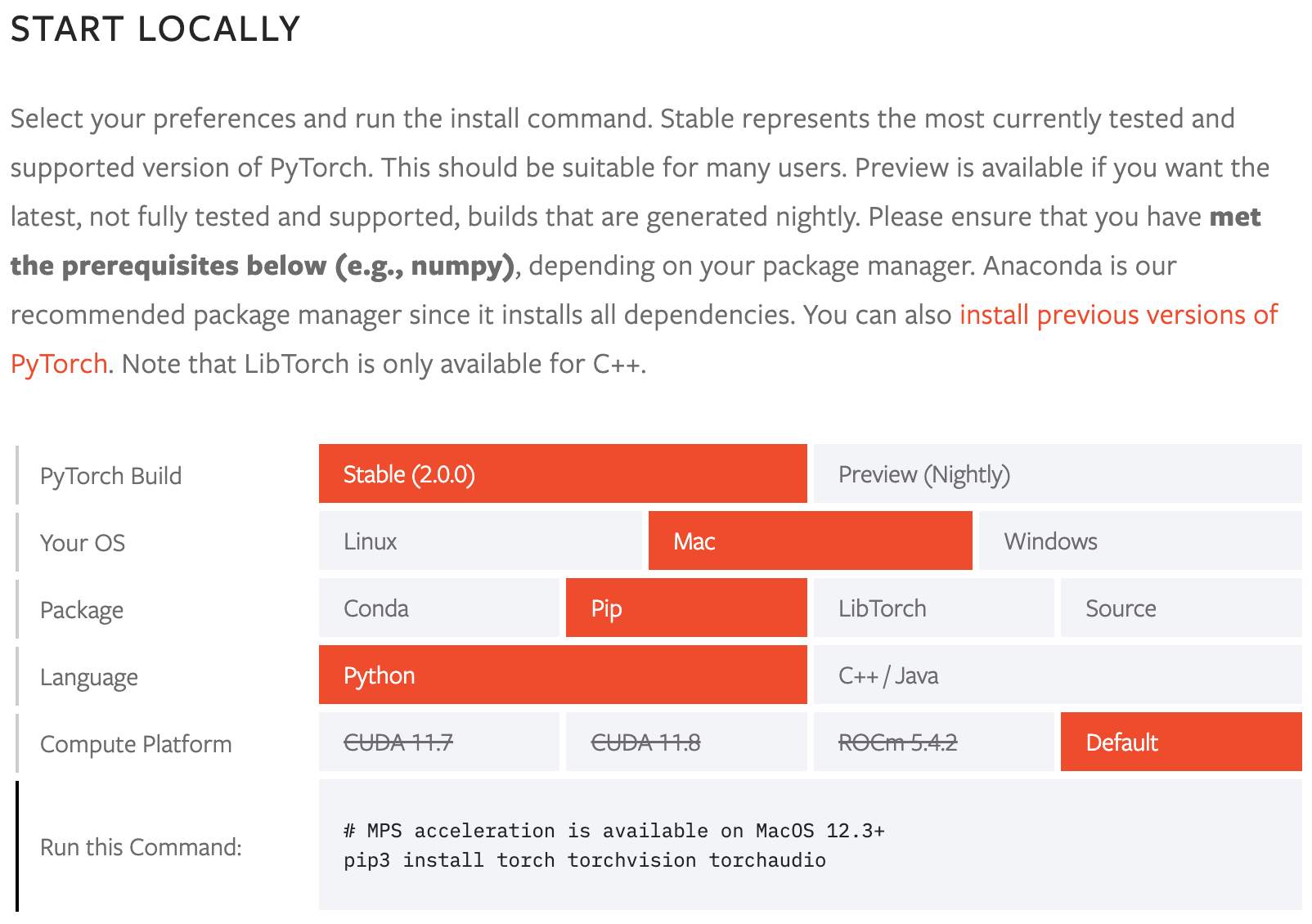

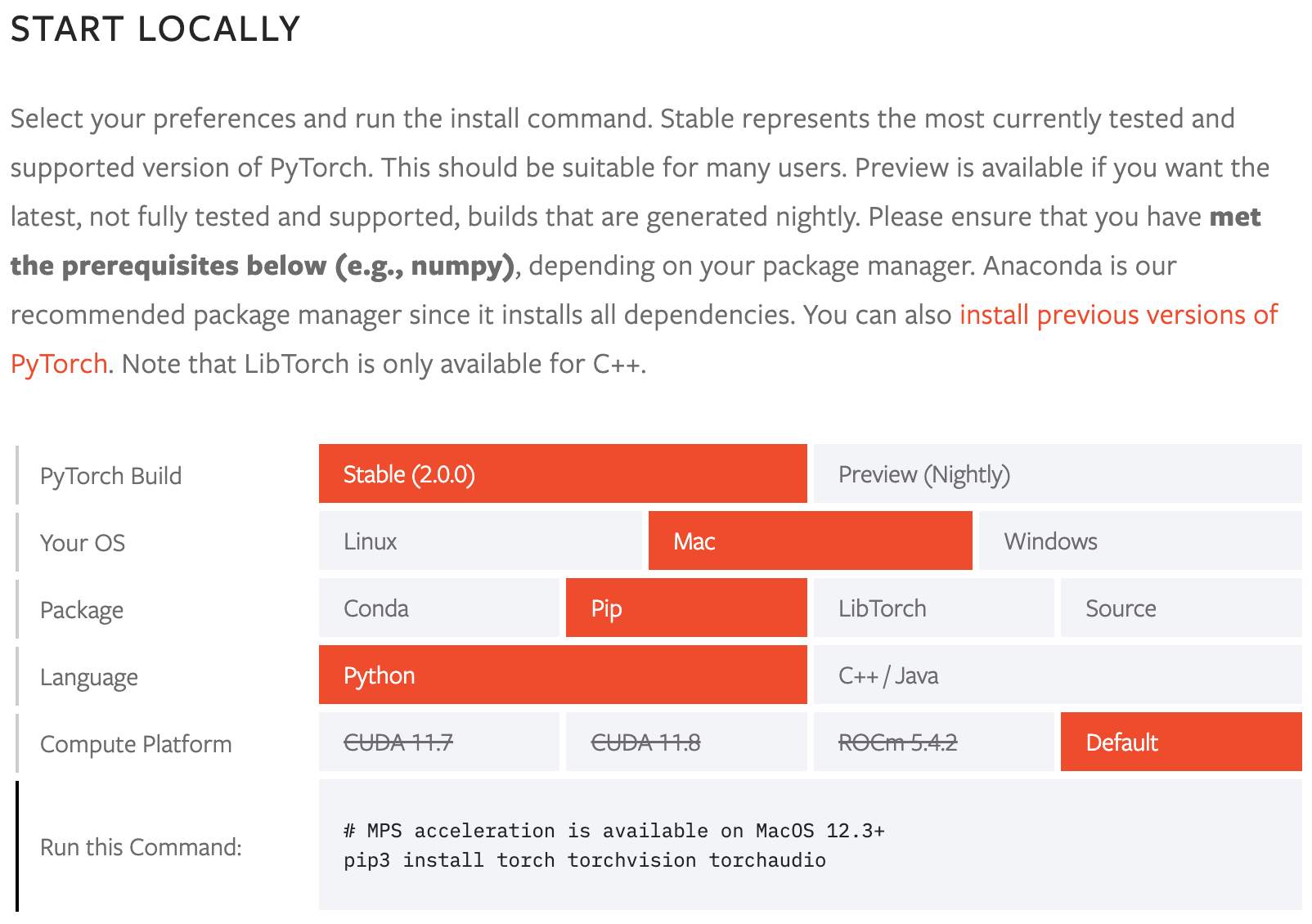

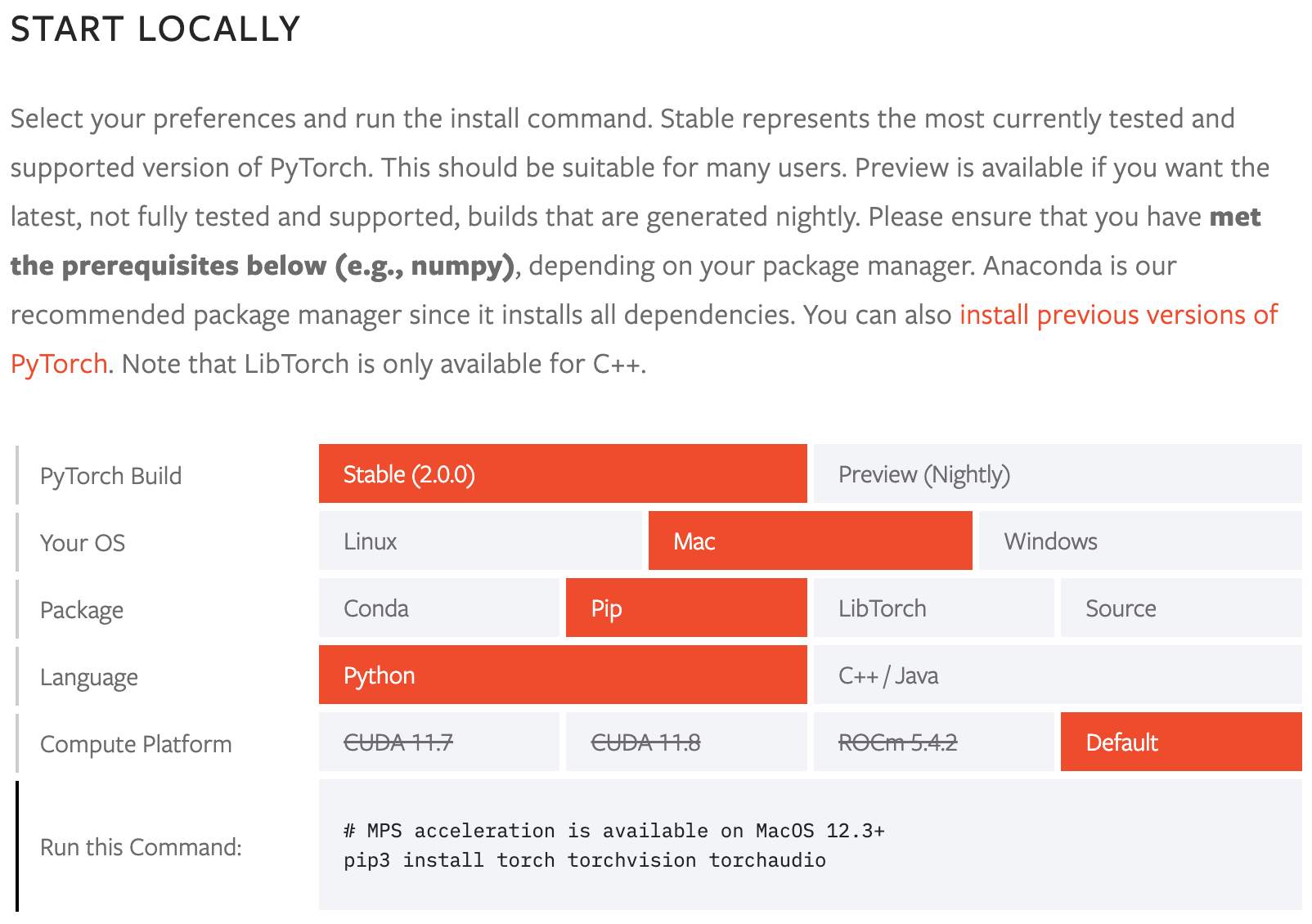

+ +Pip install the ultralytics package including all [requirements](https://github.com/ultralytics/ultralytics/blob/main/requirements.txt) in a [**Python>=3.8**](https://www.python.org/) environment with [**PyTorch>=1.8**](https://pytorch.org/get-started/locally/). + +[](https://badge.fury.io/py/ultralytics) [](https://pepy.tech/project/ultralytics) + +```bash +pip install ultralytics +``` + +For alternative installation methods including [Conda](https://anaconda.org/conda-forge/ultralytics), [Docker](https://hub.docker.com/r/ultralytics/ultralytics), and Git, please refer to the [Quickstart Guide](https://docs.ultralytics.com/quickstart). + +Usage

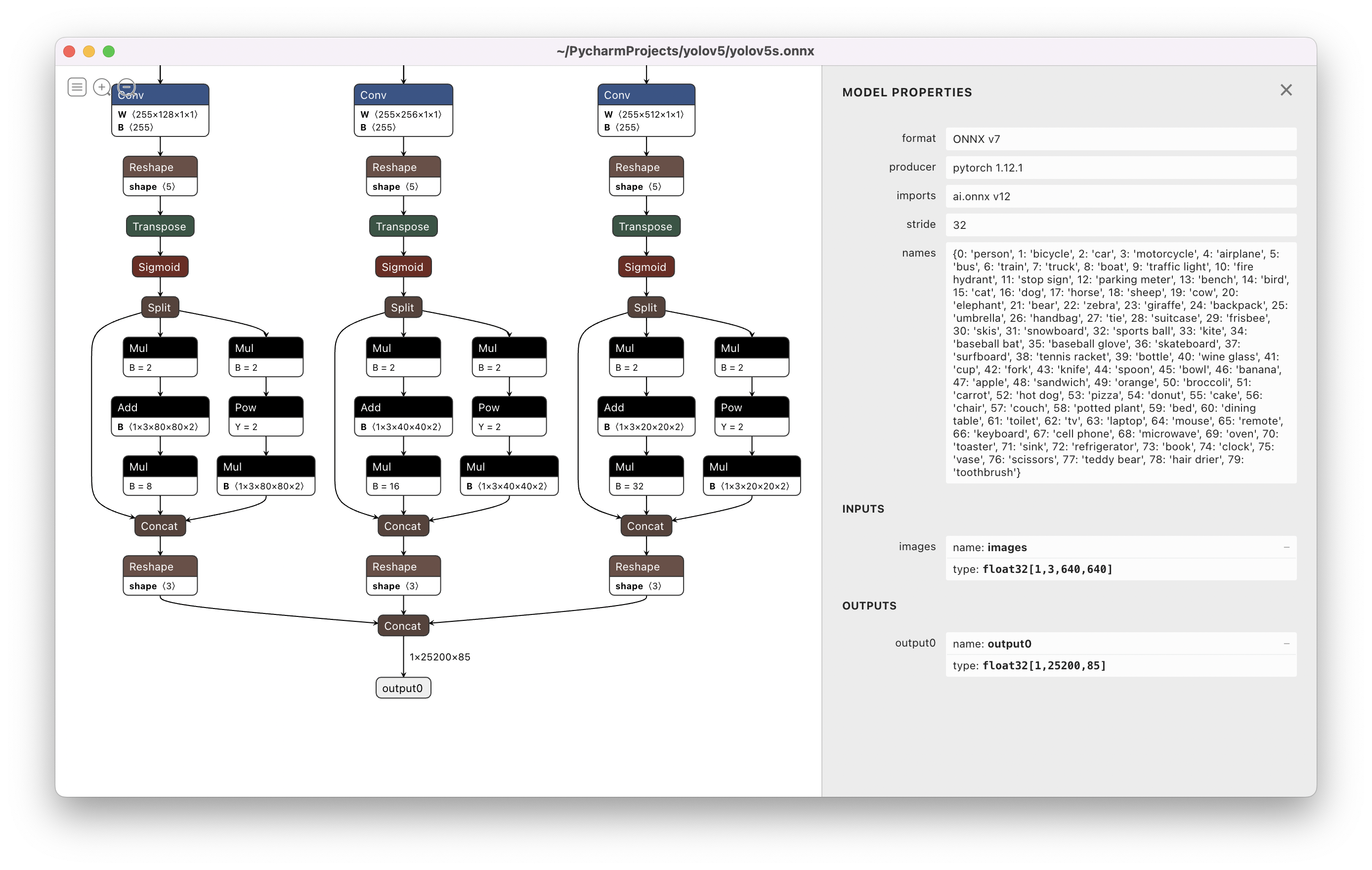

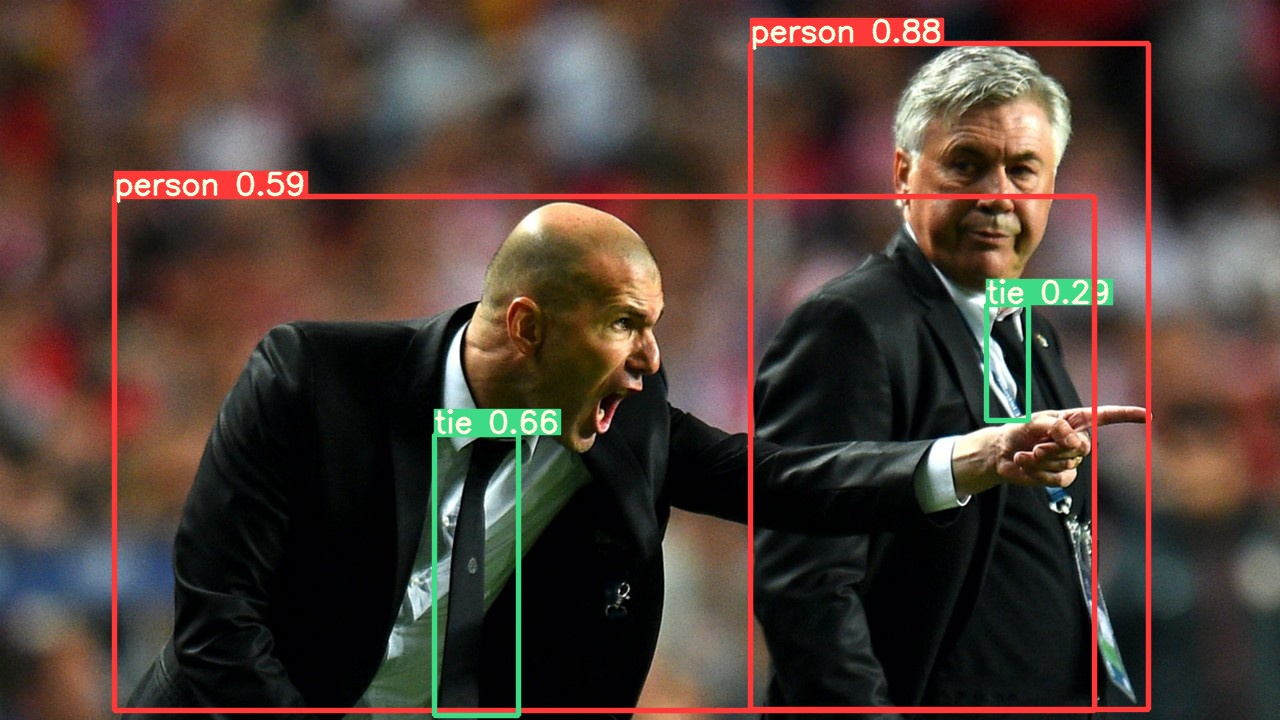

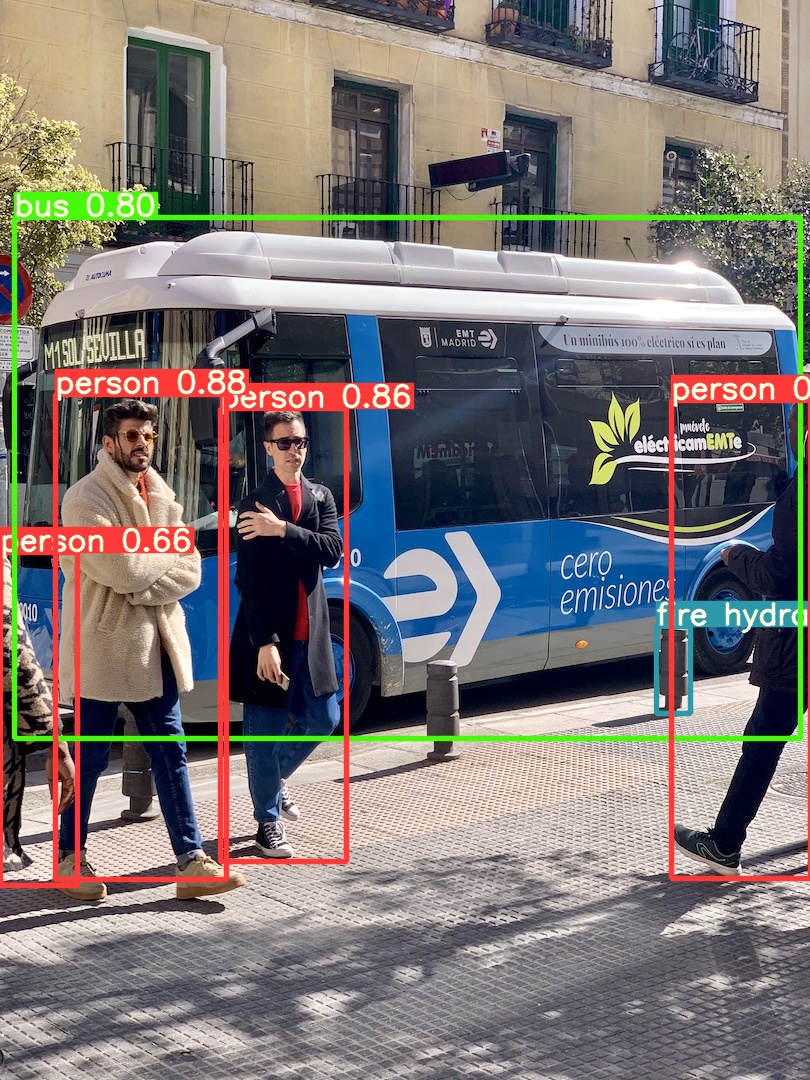

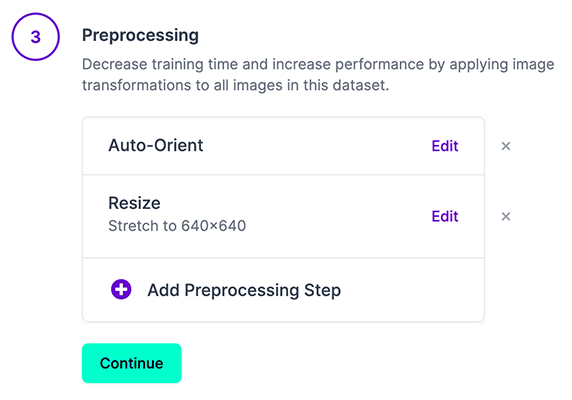

+ +#### CLI + +YOLOv8 may be used directly in the Command Line Interface (CLI) with a `yolo` command: + +```bash +yolo predict model=yolov8n.pt source='https://ultralytics.com/images/bus.jpg' +``` + +`yolo` can be used for a variety of tasks and modes and accepts additional arguments, i.e. `imgsz=640`. See the YOLOv8 [CLI Docs](https://docs.ultralytics.com/usage/cli) for examples. + +#### Python + +YOLOv8 may also be used directly in a Python environment, and accepts the same [arguments](https://docs.ultralytics.com/usage/cfg/) as in the CLI example above: + +```python +from ultralytics import YOLO + +# Load a model +model = YOLO("yolov8n.yaml") # build a new model from scratch +model = YOLO("yolov8n.pt") # load a pretrained model (recommended for training) + +# Use the model +model.train(data="coco128.yaml", epochs=3) # train the model +metrics = model.val() # evaluate model performance on the validation set +results = model("https://ultralytics.com/images/bus.jpg") # predict on an image +path = model.export(format="onnx") # export the model to ONNX format +``` + +See YOLOv8 [Python Docs](https://docs.ultralytics.com/usage/python) for more examples. + + +

+All [Models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/cfg/models) download automatically from the latest Ultralytics [release](https://github.com/ultralytics/assets/releases) on first use.

+

+

+

+All [Models](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/cfg/models) download automatically from the latest Ultralytics [release](https://github.com/ultralytics/assets/releases) on first use.

+

+Detection (COCO)

+ +See [Detection Docs](https://docs.ultralytics.com/tasks/detect/) for usage examples with these models trained on [COCO](https://docs.ultralytics.com/datasets/detect/coco/), which include 80 pre-trained classes. + +| Model | size(pixels) | mAPval

50-95 | Speed

CPU ONNX

(ms) | Speed

A100 TensorRT

(ms) | params

(M) | FLOPs

(B) | +| ------------------------------------------------------------------------------------ | --------------------- | -------------------- | ------------------------------ | ----------------------------------- | ------------------ | ----------------- | +| [YOLOv8n](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n.pt) | 640 | 37.3 | 80.4 | 0.99 | 3.2 | 8.7 | +| [YOLOv8s](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8s.pt) | 640 | 44.9 | 128.4 | 1.20 | 11.2 | 28.6 | +| [YOLOv8m](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8m.pt) | 640 | 50.2 | 234.7 | 1.83 | 25.9 | 78.9 | +| [YOLOv8l](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8l.pt) | 640 | 52.9 | 375.2 | 2.39 | 43.7 | 165.2 | +| [YOLOv8x](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x.pt) | 640 | 53.9 | 479.1 | 3.53 | 68.2 | 257.8 | + +- **mAPval** values are for single-model single-scale on [COCO val2017](http://cocodataset.org) dataset.

Reproduce by `yolo val detect data=coco.yaml device=0` +- **Speed** averaged over COCO val images using an [Amazon EC2 P4d](https://aws.amazon.com/ec2/instance-types/p4/) instance.

Reproduce by `yolo val detect data=coco.yaml batch=1 device=0|cpu` + +

Detection (Open Image V7)

+ +See [Detection Docs](https://docs.ultralytics.com/tasks/detect/) for usage examples with these models trained on [Open Image V7](https://docs.ultralytics.com/datasets/detect/open-images-v7/), which include 600 pre-trained classes. + +| Model | size(pixels) | mAPval

50-95 | Speed

CPU ONNX

(ms) | Speed

A100 TensorRT

(ms) | params

(M) | FLOPs

(B) | +| ----------------------------------------------------------------------------------------- | --------------------- | -------------------- | ------------------------------ | ----------------------------------- | ------------------ | ----------------- | +| [YOLOv8n](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n-oiv7.pt) | 640 | 18.4 | 142.4 | 1.21 | 3.5 | 10.5 | +| [YOLOv8s](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8s-oiv7.pt) | 640 | 27.7 | 183.1 | 1.40 | 11.4 | 29.7 | +| [YOLOv8m](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8m-oiv7.pt) | 640 | 33.6 | 408.5 | 2.26 | 26.2 | 80.6 | +| [YOLOv8l](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8l-oiv7.pt) | 640 | 34.9 | 596.9 | 2.43 | 44.1 | 167.4 | +| [YOLOv8x](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x-oiv7.pt) | 640 | 36.3 | 860.6 | 3.56 | 68.7 | 260.6 | + +- **mAPval** values are for single-model single-scale on [Open Image V7](https://docs.ultralytics.com/datasets/detect/open-images-v7/) dataset.

Reproduce by `yolo val detect data=open-images-v7.yaml device=0` +- **Speed** averaged over Open Image V7 val images using an [Amazon EC2 P4d](https://aws.amazon.com/ec2/instance-types/p4/) instance.

Reproduce by `yolo val detect data=open-images-v7.yaml batch=1 device=0|cpu` + +

Segmentation (COCO)

+ +See [Segmentation Docs](https://docs.ultralytics.com/tasks/segment/) for usage examples with these models trained on [COCO-Seg](https://docs.ultralytics.com/datasets/segment/coco/), which include 80 pre-trained classes. + +| Model | size(pixels) | mAPbox

50-95 | mAPmask

50-95 | Speed

CPU ONNX

(ms) | Speed

A100 TensorRT

(ms) | params

(M) | FLOPs

(B) | +| -------------------------------------------------------------------------------------------- | --------------------- | -------------------- | --------------------- | ------------------------------ | ----------------------------------- | ------------------ | ----------------- | +| [YOLOv8n-seg](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n-seg.pt) | 640 | 36.7 | 30.5 | 96.1 | 1.21 | 3.4 | 12.6 | +| [YOLOv8s-seg](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8s-seg.pt) | 640 | 44.6 | 36.8 | 155.7 | 1.47 | 11.8 | 42.6 | +| [YOLOv8m-seg](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8m-seg.pt) | 640 | 49.9 | 40.8 | 317.0 | 2.18 | 27.3 | 110.2 | +| [YOLOv8l-seg](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8l-seg.pt) | 640 | 52.3 | 42.6 | 572.4 | 2.79 | 46.0 | 220.5 | +| [YOLOv8x-seg](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x-seg.pt) | 640 | 53.4 | 43.4 | 712.1 | 4.02 | 71.8 | 344.1 | + +- **mAPval** values are for single-model single-scale on [COCO val2017](http://cocodataset.org) dataset.

Reproduce by `yolo val segment data=coco-seg.yaml device=0` +- **Speed** averaged over COCO val images using an [Amazon EC2 P4d](https://aws.amazon.com/ec2/instance-types/p4/) instance.

Reproduce by `yolo val segment data=coco-seg.yaml batch=1 device=0|cpu` + +

Pose (COCO)

+ +See [Pose Docs](https://docs.ultralytics.com/tasks/pose/) for usage examples with these models trained on [COCO-Pose](https://docs.ultralytics.com/datasets/pose/coco/), which include 1 pre-trained class, person. + +| Model | size(pixels) | mAPpose

50-95 | mAPpose

50 | Speed

CPU ONNX

(ms) | Speed

A100 TensorRT

(ms) | params

(M) | FLOPs

(B) | +| ---------------------------------------------------------------------------------------------------- | --------------------- | --------------------- | ------------------ | ------------------------------ | ----------------------------------- | ------------------ | ----------------- | +| [YOLOv8n-pose](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n-pose.pt) | 640 | 50.4 | 80.1 | 131.8 | 1.18 | 3.3 | 9.2 | +| [YOLOv8s-pose](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8s-pose.pt) | 640 | 60.0 | 86.2 | 233.2 | 1.42 | 11.6 | 30.2 | +| [YOLOv8m-pose](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8m-pose.pt) | 640 | 65.0 | 88.8 | 456.3 | 2.00 | 26.4 | 81.0 | +| [YOLOv8l-pose](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8l-pose.pt) | 640 | 67.6 | 90.0 | 784.5 | 2.59 | 44.4 | 168.6 | +| [YOLOv8x-pose](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x-pose.pt) | 640 | 69.2 | 90.2 | 1607.1 | 3.73 | 69.4 | 263.2 | +| [YOLOv8x-pose-p6](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x-pose-p6.pt) | 1280 | 71.6 | 91.2 | 4088.7 | 10.04 | 99.1 | 1066.4 | + +- **mAPval** values are for single-model single-scale on [COCO Keypoints val2017](http://cocodataset.org) dataset.

Reproduce by `yolo val pose data=coco-pose.yaml device=0` +- **Speed** averaged over COCO val images using an [Amazon EC2 P4d](https://aws.amazon.com/ec2/instance-types/p4/) instance.

Reproduce by `yolo val pose data=coco-pose.yaml batch=1 device=0|cpu` + +

Classification (ImageNet)

+ +See [Classification Docs](https://docs.ultralytics.com/tasks/classify/) for usage examples with these models trained on [ImageNet](https://docs.ultralytics.com/datasets/classify/imagenet/), which include 1000 pretrained classes. + +| Model | size(pixels) | acc

top1 | acc

top5 | Speed

CPU ONNX

(ms) | Speed

A100 TensorRT

(ms) | params

(M) | FLOPs

(B) at 640 | +| -------------------------------------------------------------------------------------------- | --------------------- | ---------------- | ---------------- | ------------------------------ | ----------------------------------- | ------------------ | ------------------------ | +| [YOLOv8n-cls](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n-cls.pt) | 224 | 66.6 | 87.0 | 12.9 | 0.31 | 2.7 | 4.3 | +| [YOLOv8s-cls](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8s-cls.pt) | 224 | 72.3 | 91.1 | 23.4 | 0.35 | 6.4 | 13.5 | +| [YOLOv8m-cls](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8m-cls.pt) | 224 | 76.4 | 93.2 | 85.4 | 0.62 | 17.0 | 42.7 | +| [YOLOv8l-cls](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8l-cls.pt) | 224 | 78.0 | 94.1 | 163.0 | 0.87 | 37.5 | 99.7 | +| [YOLOv8x-cls](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x-cls.pt) | 224 | 78.4 | 94.3 | 232.0 | 1.01 | 57.4 | 154.8 | + +- **acc** values are model accuracies on the [ImageNet](https://www.image-net.org/) dataset validation set.

Reproduce by `yolo val classify data=path/to/ImageNet device=0` +- **Speed** averaged over ImageNet val images using an [Amazon EC2 P4d](https://aws.amazon.com/ec2/instance-types/p4/) instance.

Reproduce by `yolo val classify data=path/to/ImageNet batch=1 device=0|cpu` + +

+ +

+

++

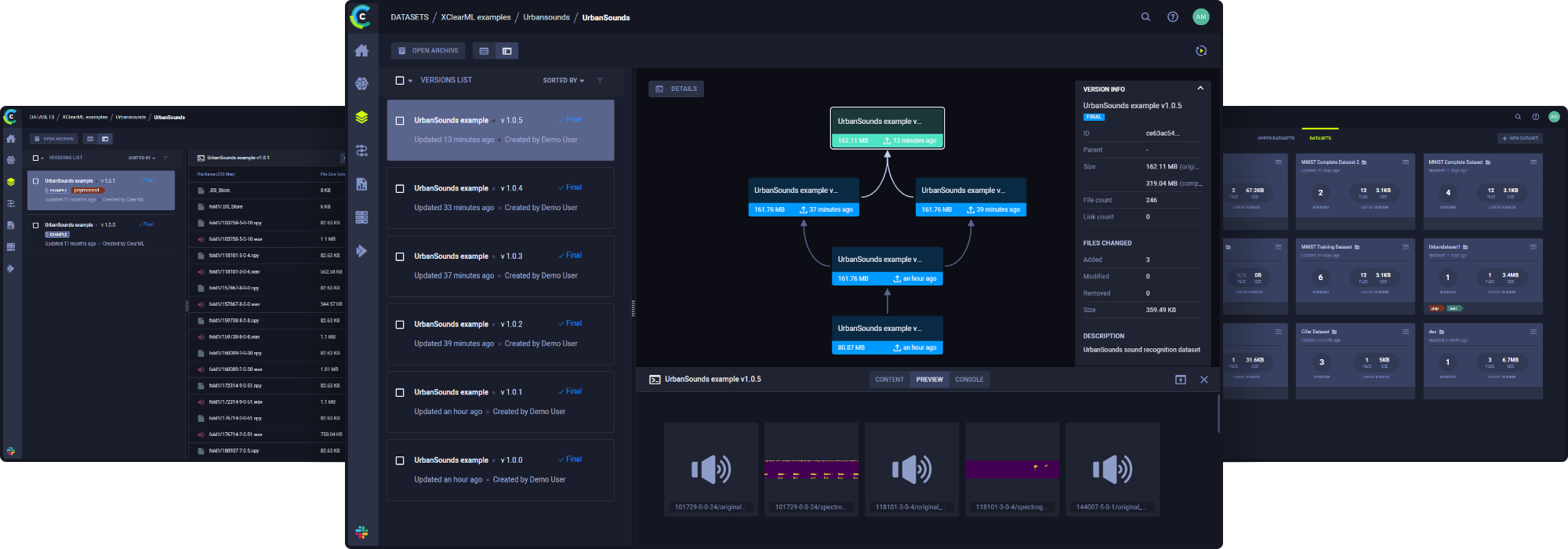

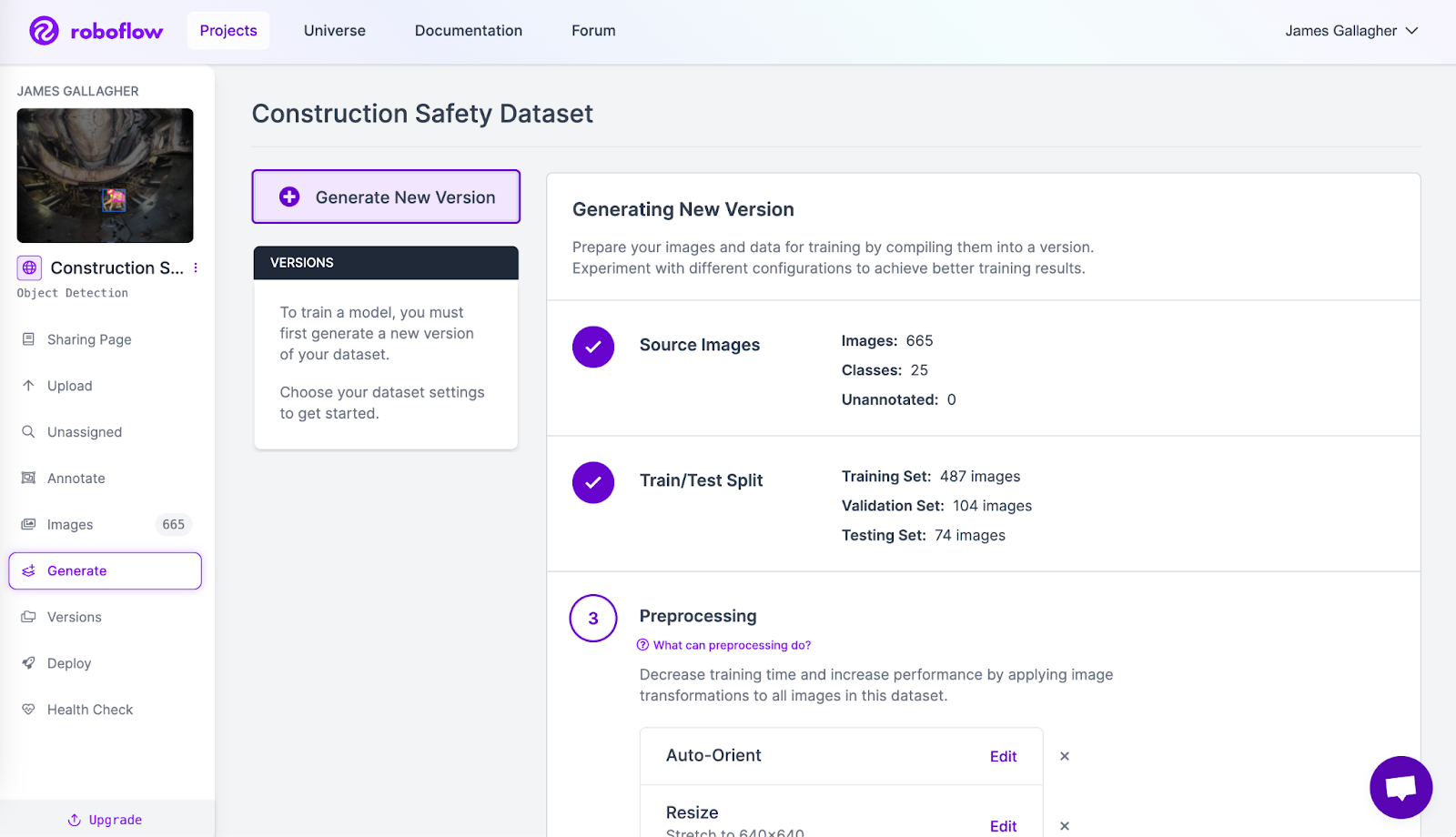

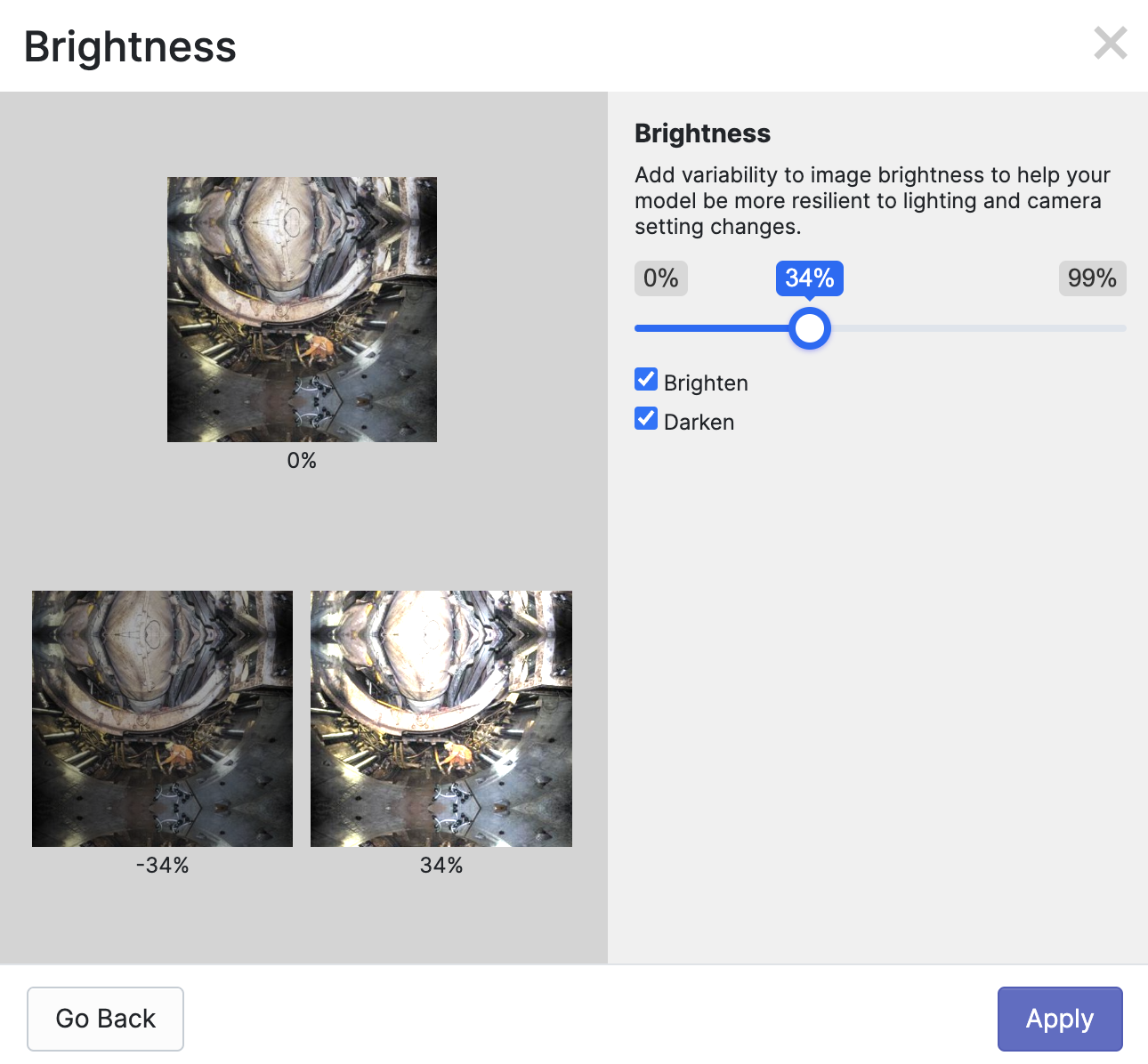

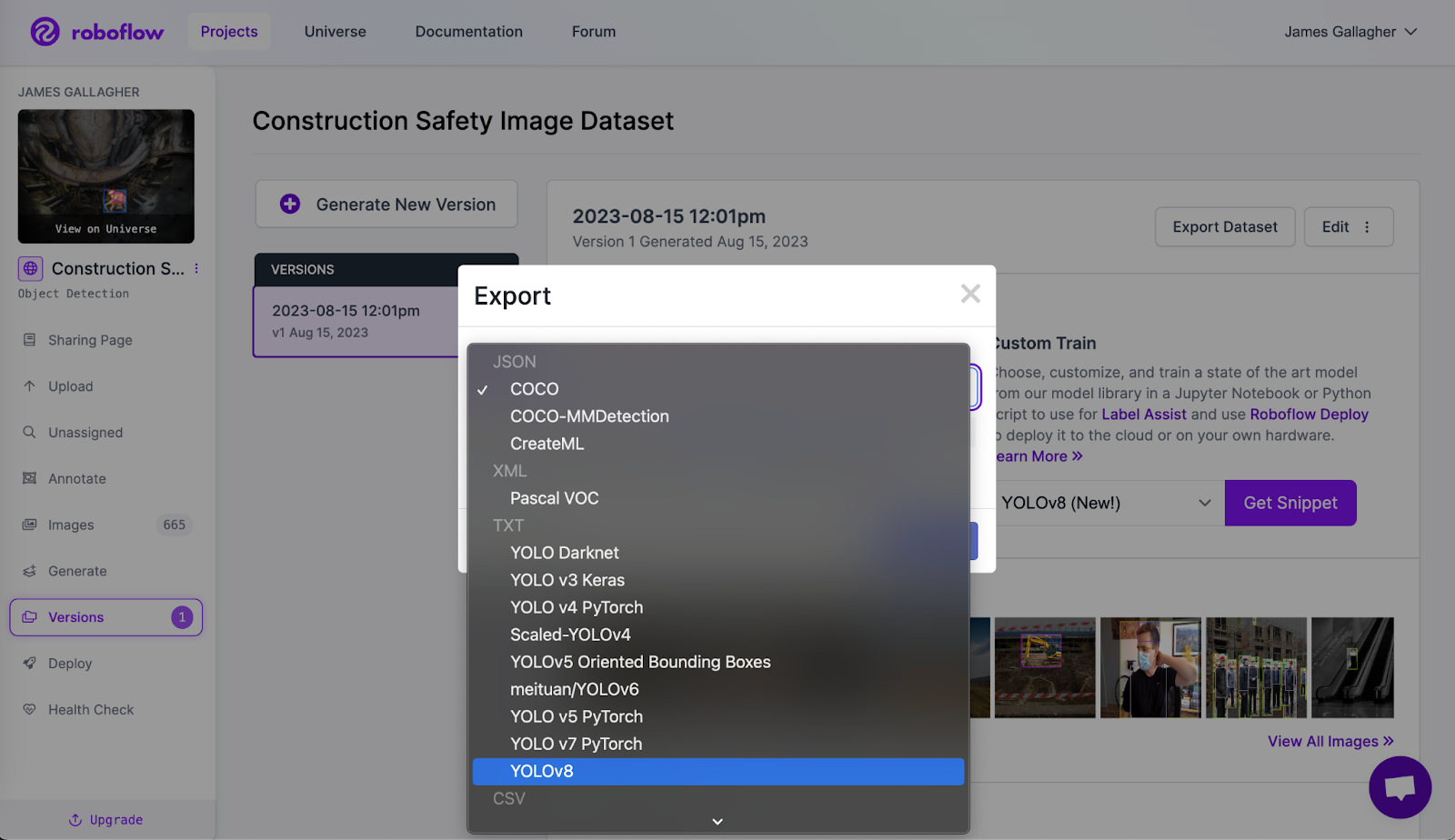

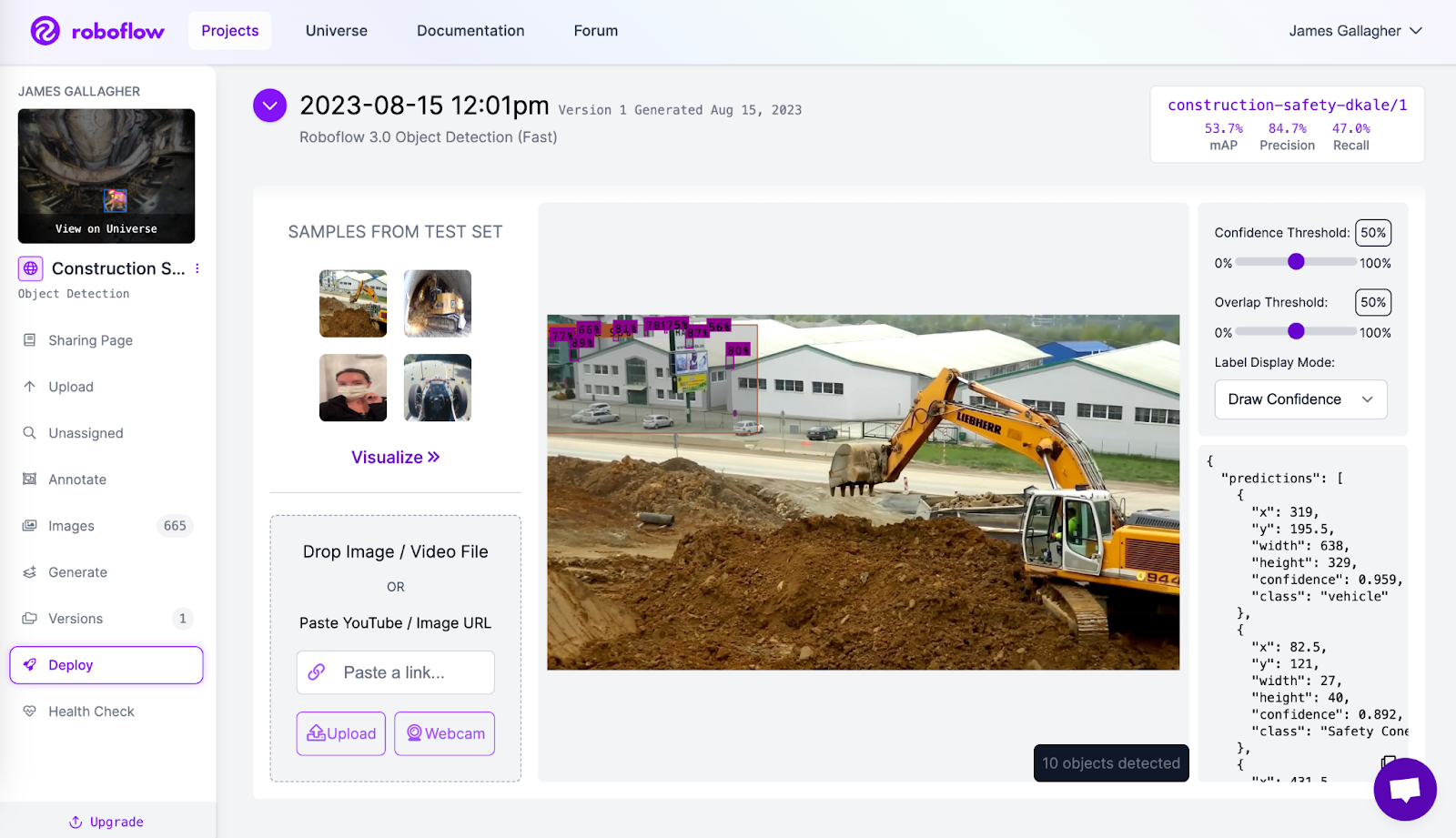

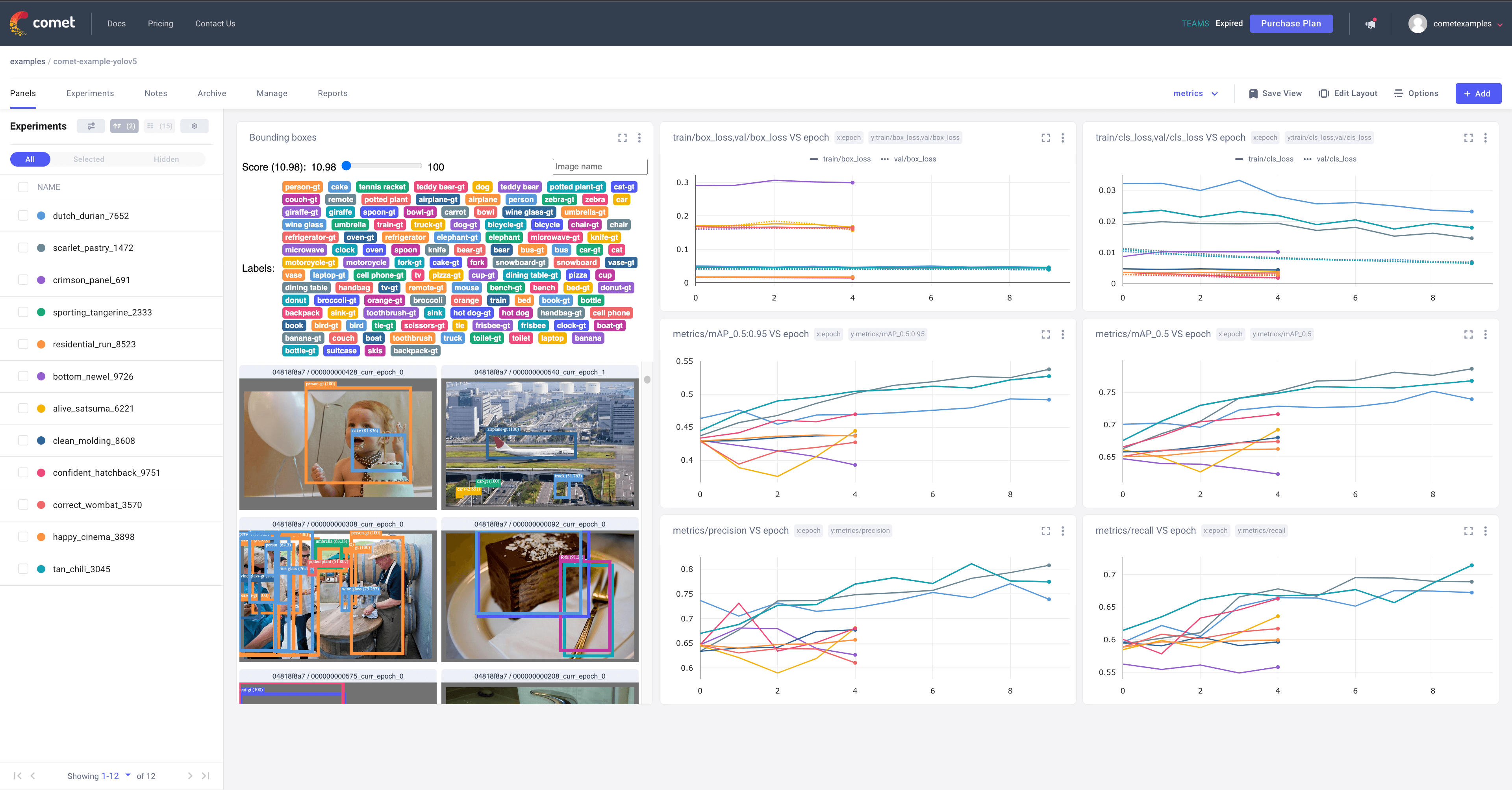

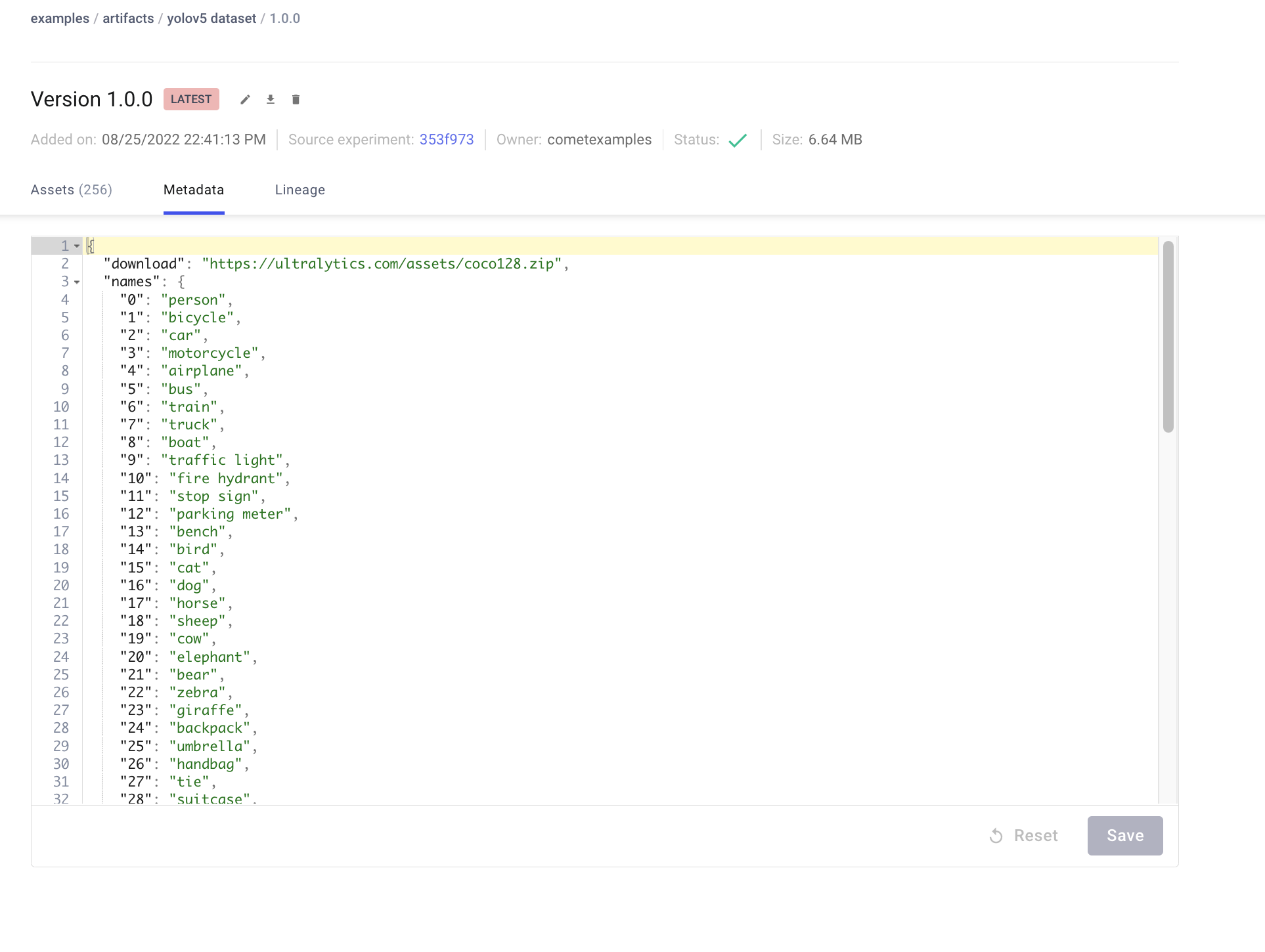

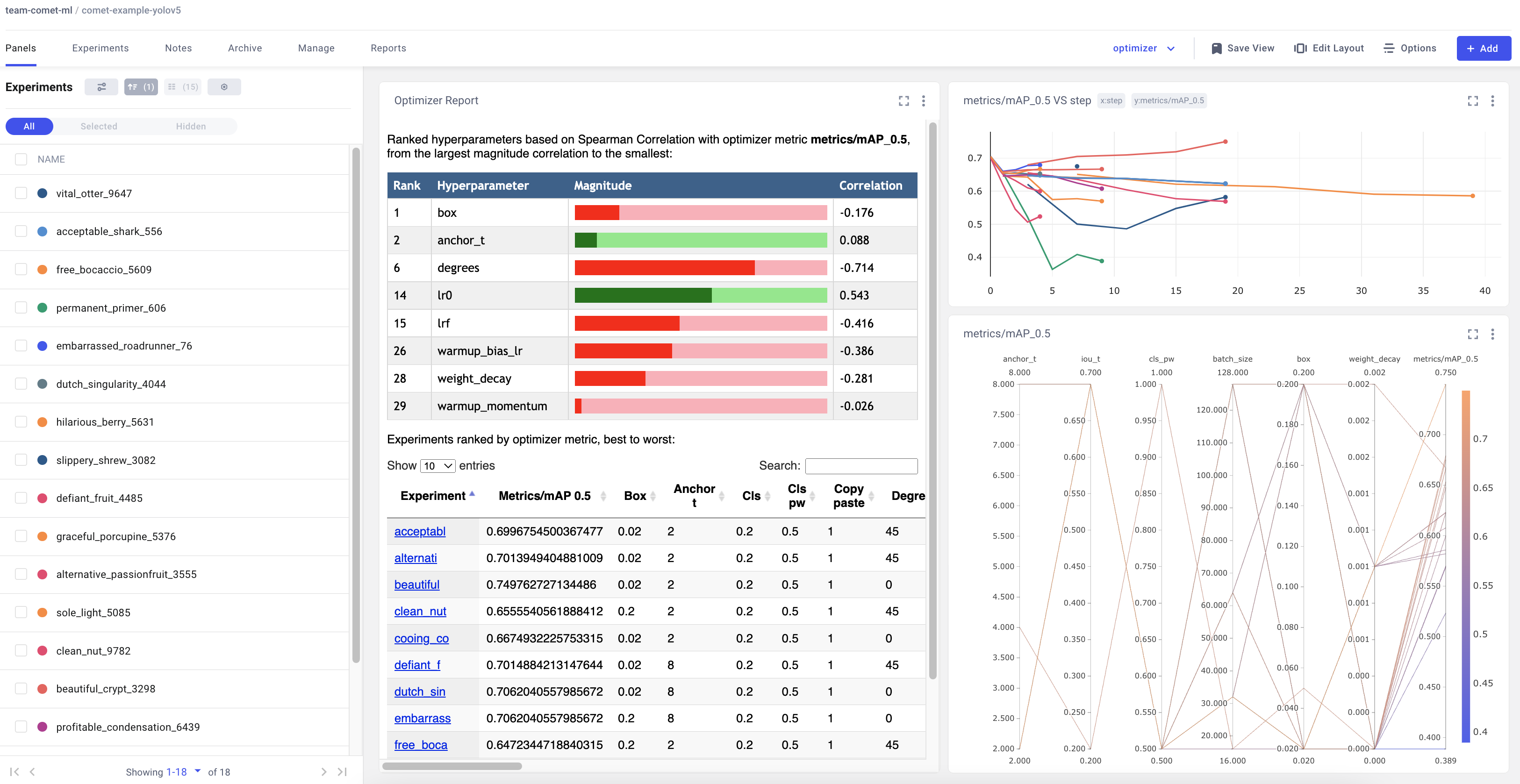

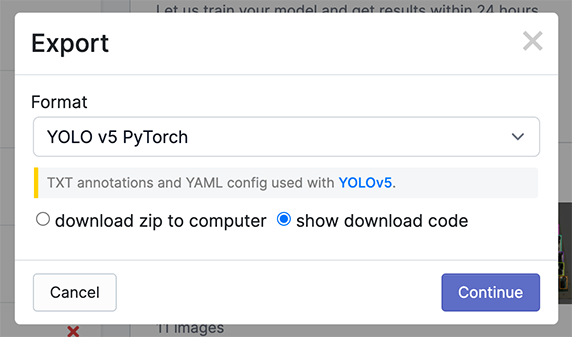

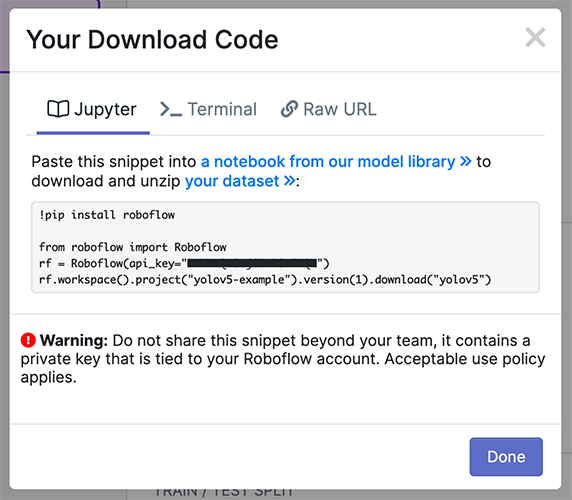

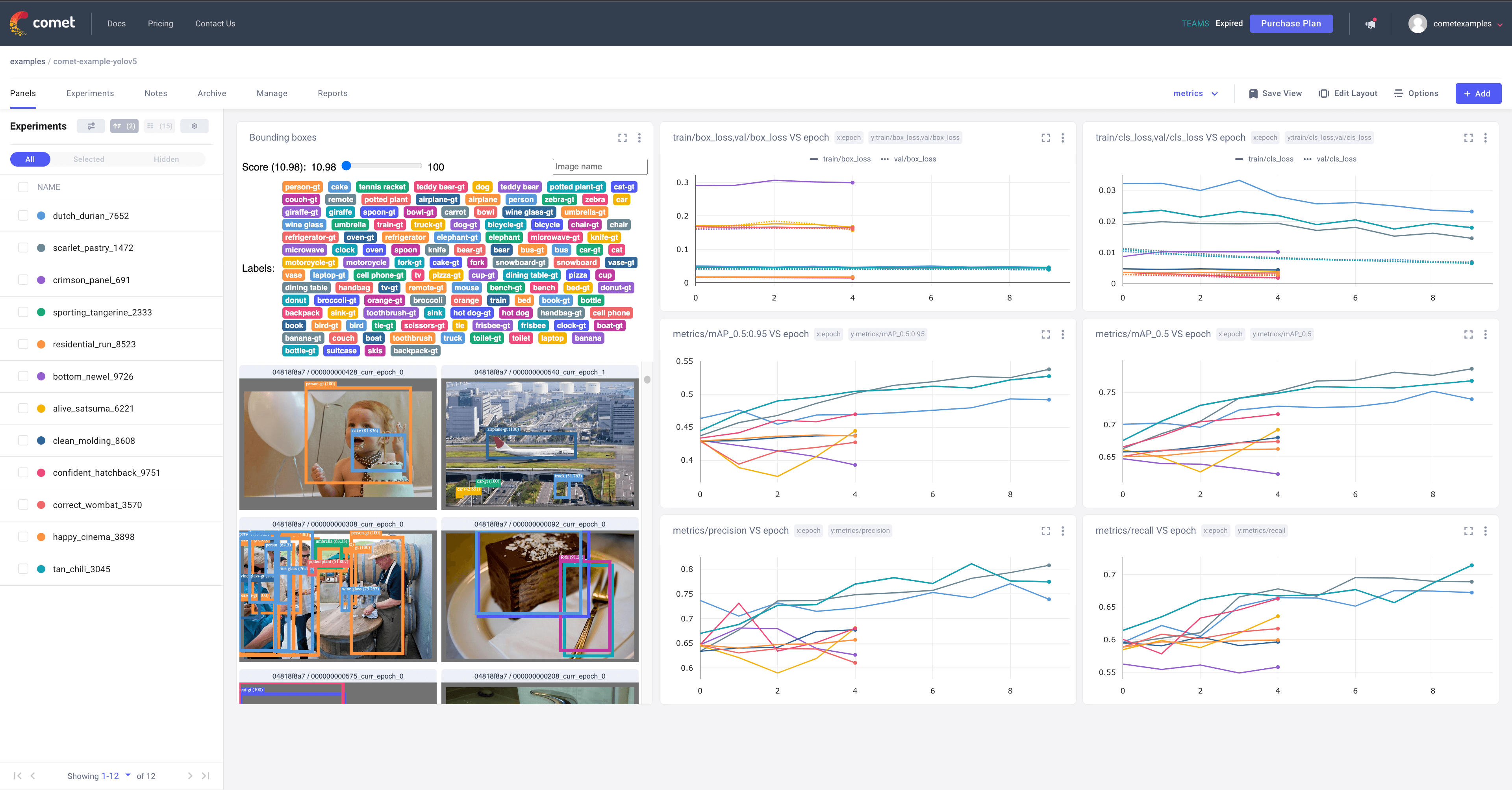

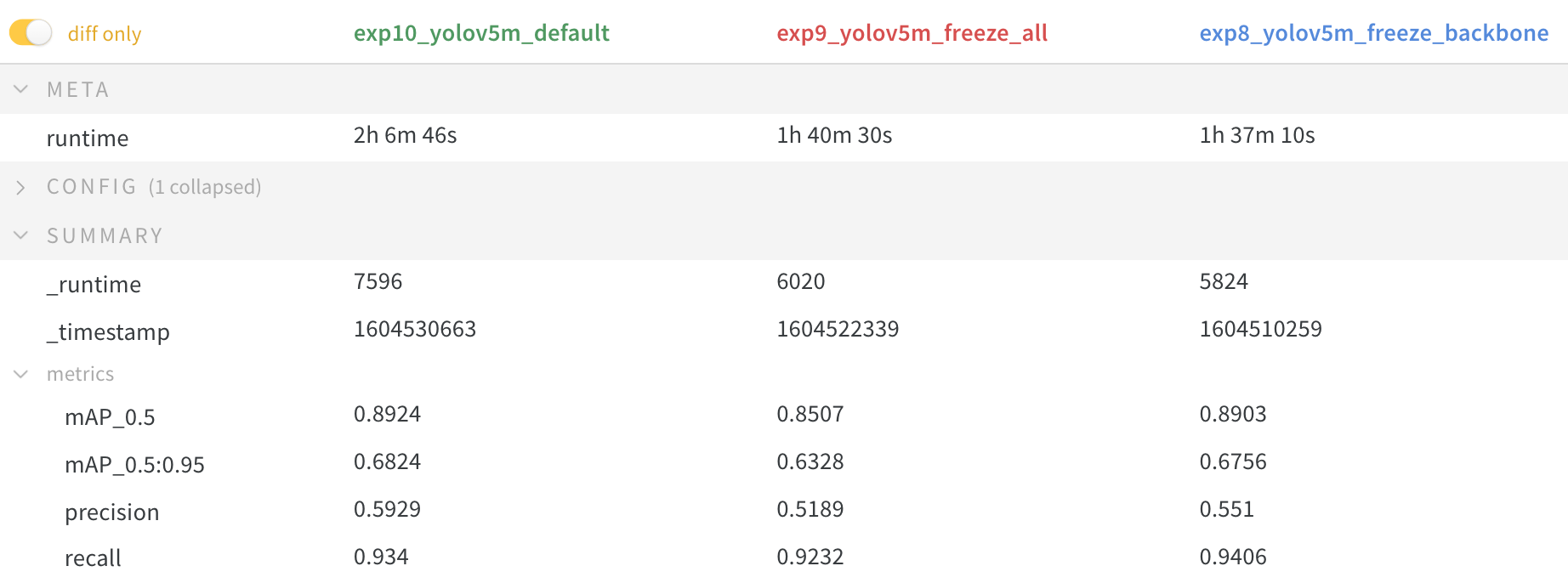

+ + + +| Roboflow | ClearML ⭐ NEW | Comet ⭐ NEW | Neural Magic ⭐ NEW | +| :--------------------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------: | +| Label and export your custom datasets directly to YOLOv8 for training with [Roboflow](https://roboflow.com/?ref=ultralytics) | Automatically track, visualize and even remotely train YOLOv8 using [ClearML](https://cutt.ly/yolov5-readme-clearml) (open-source!) | Free forever, [Comet](https://bit.ly/yolov8-readme-comet) lets you save YOLOv8 models, resume training, and interactively visualize and debug predictions | Run YOLOv8 inference up to 6x faster with [Neural Magic DeepSparse](https://bit.ly/yolov5-neuralmagic) | + +##

+

+##

+

+##  +

+##

+

+## + diff --git a/ultralytics/README.md:Zone.Identifier b/ultralytics/README.md:Zone.Identifier new file mode 100755 index 0000000..a45e1ac --- /dev/null +++ b/ultralytics/README.md:Zone.Identifier @@ -0,0 +1,2 @@ +[ZoneTransfer] +ZoneId=3 diff --git a/ultralytics/README.zh-CN.md b/ultralytics/README.zh-CN.md new file mode 100755 index 0000000..7a3bf9c --- /dev/null +++ b/ultralytics/README.zh-CN.md @@ -0,0 +1,265 @@ +

+

+  +

+

+ + +

+ +[Ultralytics](https://ultralytics.com) [YOLOv8](https://github.com/ultralytics/ultralytics) 是一款前沿、最先进(SOTA)的模型,基于先前 YOLO 版本的成功,引入了新功能和改进,进一步提升性能和灵活性。YOLOv8 设计快速、准确且易于使用,使其成为各种物体检测与跟踪、实例分割、图像分类和姿态估计任务的绝佳选择。 + +我们希望这里的资源能帮助您充分利用 YOLOv8。请浏览 YOLOv8 文档 了解详细信息,在 GitHub 上提交问题以获得支持,并加入我们的 Discord 社区进行问题和讨论! + +如需申请企业许可,请在 [Ultralytics Licensing](https://ultralytics.com/license) 处填写表格 + +

+

+

+

+

+

+安装

+ +使用Pip在一个[**Python>=3.8**](https://www.python.org/)环境中安装`ultralytics`包,此环境还需包含[**PyTorch>=1.8**](https://pytorch.org/get-started/locally/)。这也会安装所有必要的[依赖项](https://github.com/ultralytics/ultralytics/blob/main/requirements.txt)。 + +[](https://badge.fury.io/py/ultralytics) [](https://pepy.tech/project/ultralytics) + +```bash +pip install ultralytics +``` + +如需使用包括[Conda](https://anaconda.org/conda-forge/ultralytics)、[Docker](https://hub.docker.com/r/ultralytics/ultralytics)和Git在内的其他安装方法,请参考[快速入门指南](https://docs.ultralytics.com/quickstart)。 + +Usage

+ +#### CLI + +YOLOv8 可以在命令行界面(CLI)中直接使用,只需输入 `yolo` 命令: + +```bash +yolo predict model=yolov8n.pt source='https://ultralytics.com/images/bus.jpg' +``` + +`yolo` 可用于各种任务和模式,并接受其他参数,例如 `imgsz=640`。查看 YOLOv8 [CLI 文档](https://docs.ultralytics.com/usage/cli)以获取示例。 + +#### Python + +YOLOv8 也可以在 Python 环境中直接使用,并接受与上述 CLI 示例中相同的[参数](https://docs.ultralytics.com/usage/cfg/): + +```python +from ultralytics import YOLO + +# 加载模型 +model = YOLO("yolov8n.yaml") # 从头开始构建新模型 +model = YOLO("yolov8n.pt") # 加载预训练模型(建议用于训练) + +# 使用模型 +model.train(data="coco128.yaml", epochs=3) # 训练模型 +metrics = model.val() # 在验证集上评估模型性能 +results = model("https://ultralytics.com/images/bus.jpg") # 对图像进行预测 +success = model.export(format="onnx") # 将模型导出为 ONNX 格式 +``` + +查看 YOLOv8 [Python 文档](https://docs.ultralytics.com/usage/python)以获取更多示例。 + + +

+所有[模型](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/cfg/models)在首次使用时会自动从最新的Ultralytics [发布版本](https://github.com/ultralytics/assets/releases)下载。

+

+

+

+所有[模型](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/cfg/models)在首次使用时会自动从最新的Ultralytics [发布版本](https://github.com/ultralytics/assets/releases)下载。

+

+检测 (COCO)

+ +查看[检测文档](https://docs.ultralytics.com/tasks/detect/)以获取这些在[COCO](https://docs.ultralytics.com/datasets/detect/coco/)上训练的模型的使用示例,其中包括80个预训练类别。 + +| 模型 | 尺寸(像素) | mAPval

50-95 | 速度

CPU ONNX

(ms) | 速度

A100 TensorRT

(ms) | 参数

(M) | FLOPs

(B) | +| ------------------------------------------------------------------------------------ | --------------- | -------------------- | --------------------------- | -------------------------------- | -------------- | ----------------- | +| [YOLOv8n](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n.pt) | 640 | 37.3 | 80.4 | 0.99 | 3.2 | 8.7 | +| [YOLOv8s](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8s.pt) | 640 | 44.9 | 128.4 | 1.20 | 11.2 | 28.6 | +| [YOLOv8m](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8m.pt) | 640 | 50.2 | 234.7 | 1.83 | 25.9 | 78.9 | +| [YOLOv8l](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8l.pt) | 640 | 52.9 | 375.2 | 2.39 | 43.7 | 165.2 | +| [YOLOv8x](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x.pt) | 640 | 53.9 | 479.1 | 3.53 | 68.2 | 257.8 | + +- **mAPval** 值是基于单模型单尺度在 [COCO val2017](http://cocodataset.org) 数据集上的结果。

通过 `yolo val detect data=coco.yaml device=0` 复现 +- **速度** 是使用 [Amazon EC2 P4d](https://aws.amazon.com/ec2/instance-types/p4/) 实例对 COCO val 图像进行平均计算的。

通过 `yolo val detect data=coco.yaml batch=1 device=0|cpu` 复现 + +

检测(Open Image V7)

+ +查看[检测文档](https://docs.ultralytics.com/tasks/detect/)以获取这些在[Open Image V7](https://docs.ultralytics.com/datasets/detect/open-images-v7/)上训练的模型的使用示例,其中包括600个预训练类别。 + +| 模型 | 尺寸(像素) | mAP验证

50-95 | 速度

CPU ONNX

(毫秒) | 速度

A100 TensorRT

(毫秒) | 参数

(M) | 浮点运算

(B) | +| ----------------------------------------------------------------------------------------- | --------------- | ------------------- | --------------------------- | -------------------------------- | -------------- | ---------------- | +| [YOLOv8n](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n-oiv7.pt) | 640 | 18.4 | 142.4 | 1.21 | 3.5 | 10.5 | +| [YOLOv8s](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8s-oiv7.pt) | 640 | 27.7 | 183.1 | 1.40 | 11.4 | 29.7 | +| [YOLOv8m](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8m-oiv7.pt) | 640 | 33.6 | 408.5 | 2.26 | 26.2 | 80.6 | +| [YOLOv8l](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8l-oiv7.pt) | 640 | 34.9 | 596.9 | 2.43 | 44.1 | 167.4 | +| [YOLOv8x](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x-oiv7.pt) | 640 | 36.3 | 860.6 | 3.56 | 68.7 | 260.6 | + +- **mAP验证** 值适用于在[Open Image V7](https://docs.ultralytics.com/datasets/detect/open-images-v7/)数据集上的单模型单尺度。

通过 `yolo val detect data=open-images-v7.yaml device=0` 以复现。 +- **速度** 在使用[Amazon EC2 P4d](https://aws.amazon.com/ec2/instance-types/p4/)实例对Open Image V7验证图像进行平均测算。

通过 `yolo val detect data=open-images-v7.yaml batch=1 device=0|cpu` 以复现。 + +

分割 (COCO)

+ +查看[分割文档](https://docs.ultralytics.com/tasks/segment/)以获取这些在[COCO-Seg](https://docs.ultralytics.com/datasets/segment/coco/)上训练的模型的使用示例,其中包括80个预训练类别。 + +| 模型 | 尺寸(像素) | mAPbox

50-95 | mAPmask

50-95 | 速度

CPU ONNX

(ms) | 速度

A100 TensorRT

(ms) | 参数

(M) | FLOPs

(B) | +| -------------------------------------------------------------------------------------------- | --------------- | -------------------- | --------------------- | --------------------------- | -------------------------------- | -------------- | ----------------- | +| [YOLOv8n-seg](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n-seg.pt) | 640 | 36.7 | 30.5 | 96.1 | 1.21 | 3.4 | 12.6 | +| [YOLOv8s-seg](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8s-seg.pt) | 640 | 44.6 | 36.8 | 155.7 | 1.47 | 11.8 | 42.6 | +| [YOLOv8m-seg](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8m-seg.pt) | 640 | 49.9 | 40.8 | 317.0 | 2.18 | 27.3 | 110.2 | +| [YOLOv8l-seg](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8l-seg.pt) | 640 | 52.3 | 42.6 | 572.4 | 2.79 | 46.0 | 220.5 | +| [YOLOv8x-seg](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x-seg.pt) | 640 | 53.4 | 43.4 | 712.1 | 4.02 | 71.8 | 344.1 | + +- **mAPval** 值是基于单模型单尺度在 [COCO val2017](http://cocodataset.org) 数据集上的结果。

通过 `yolo val segment data=coco-seg.yaml device=0` 复现 +- **速度** 是使用 [Amazon EC2 P4d](https://aws.amazon.com/ec2/instance-types/p4/) 实例对 COCO val 图像进行平均计算的。

通过 `yolo val segment data=coco-seg.yaml batch=1 device=0|cpu` 复现 + +

姿态 (COCO)

+ +查看[姿态文档](https://docs.ultralytics.com/tasks/pose/)以获取这些在[COCO-Pose](https://docs.ultralytics.com/datasets/pose/coco/)上训练的模型的使用示例,其中包括1个预训练类别,即人。 + +| 模型 | 尺寸(像素) | mAPpose

50-95 | mAPpose

50 | 速度

CPU ONNX

(ms) | 速度

A100 TensorRT

(ms) | 参数

(M) | FLOPs

(B) | +| ---------------------------------------------------------------------------------------------------- | --------------- | --------------------- | ------------------ | --------------------------- | -------------------------------- | -------------- | ----------------- | +| [YOLOv8n-pose](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n-pose.pt) | 640 | 50.4 | 80.1 | 131.8 | 1.18 | 3.3 | 9.2 | +| [YOLOv8s-pose](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8s-pose.pt) | 640 | 60.0 | 86.2 | 233.2 | 1.42 | 11.6 | 30.2 | +| [YOLOv8m-pose](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8m-pose.pt) | 640 | 65.0 | 88.8 | 456.3 | 2.00 | 26.4 | 81.0 | +| [YOLOv8l-pose](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8l-pose.pt) | 640 | 67.6 | 90.0 | 784.5 | 2.59 | 44.4 | 168.6 | +| [YOLOv8x-pose](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x-pose.pt) | 640 | 69.2 | 90.2 | 1607.1 | 3.73 | 69.4 | 263.2 | +| [YOLOv8x-pose-p6](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x-pose-p6.pt) | 1280 | 71.6 | 91.2 | 4088.7 | 10.04 | 99.1 | 1066.4 | + +- **mAPval** 值是基于单模型单尺度在 [COCO Keypoints val2017](http://cocodataset.org) 数据集上的结果。

通过 `yolo val pose data=coco-pose.yaml device=0` 复现 +- **速度** 是使用 [Amazon EC2 P4d](https://aws.amazon.com/ec2/instance-types/p4/) 实例对 COCO val 图像进行平均计算的。

通过 `yolo val pose data=coco-pose.yaml batch=1 device=0|cpu` 复现 + +

分类 (ImageNet)

+ +查看[分类文档](https://docs.ultralytics.com/tasks/classify/)以获取这些在[ImageNet](https://docs.ultralytics.com/datasets/classify/imagenet/)上训练的模型的使用示例,其中包括1000个预训练类别。 + +| 模型 | 尺寸(像素) | acc

top1 | acc

top5 | 速度

CPU ONNX

(ms) | 速度

A100 TensorRT

(ms) | 参数

(M) | FLOPs

(B) at 640 | +| -------------------------------------------------------------------------------------------- | --------------- | ---------------- | ---------------- | --------------------------- | -------------------------------- | -------------- | ------------------------ | +| [YOLOv8n-cls](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n-cls.pt) | 224 | 66.6 | 87.0 | 12.9 | 0.31 | 2.7 | 4.3 | +| [YOLOv8s-cls](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8s-cls.pt) | 224 | 72.3 | 91.1 | 23.4 | 0.35 | 6.4 | 13.5 | +| [YOLOv8m-cls](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8m-cls.pt) | 224 | 76.4 | 93.2 | 85.4 | 0.62 | 17.0 | 42.7 | +| [YOLOv8l-cls](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8l-cls.pt) | 224 | 78.0 | 94.1 | 163.0 | 0.87 | 37.5 | 99.7 | +| [YOLOv8x-cls](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x-cls.pt) | 224 | 78.4 | 94.3 | 232.0 | 1.01 | 57.4 | 154.8 | + +- **acc** 值是模型在 [ImageNet](https://www.image-net.org/) 数据集验证集上的准确率。

通过 `yolo val classify data=path/to/ImageNet device=0` 复现 +- **速度** 是使用 [Amazon EC2 P4d](https://aws.amazon.com/ec2/instance-types/p4/) 实例对 ImageNet val 图像进行平均计算的。

通过 `yolo val classify data=path/to/ImageNet batch=1 device=0|cpu` 复现 + +

+ +

+

++

+ + + +| Roboflow | ClearML ⭐ NEW | Comet ⭐ NEW | Neural Magic ⭐ NEW | +| :--------------------------------------------------------------------------------: | :----------------------------------------------------------------------------: | :----------------------------------------------------------------------------------: | :-----------------------------------------------------------------------------------: | +| 使用 [Roboflow](https://roboflow.com/?ref=ultralytics) 将您的自定义数据集直接标记并导出至 YOLOv8 进行训练 | 使用 [ClearML](https://cutt.ly/yolov5-readme-clearml)(开源!)自动跟踪、可视化,甚至远程训练 YOLOv8 | 免费且永久,[Comet](https://bit.ly/yolov8-readme-comet) 让您保存 YOLOv8 模型、恢复训练,并以交互式方式查看和调试预测 | 使用 [Neural Magic DeepSparse](https://bit.ly/yolov5-neuralmagic) 使 YOLOv8 推理速度提高多达 6 倍 | + +##

+

+##

+

+##  +

+##

+

+## + diff --git a/ultralytics/README.zh-CN.md:Zone.Identifier b/ultralytics/README.zh-CN.md:Zone.Identifier new file mode 100755 index 0000000..a45e1ac --- /dev/null +++ b/ultralytics/README.zh-CN.md:Zone.Identifier @@ -0,0 +1,2 @@ +[ZoneTransfer] +ZoneId=3 diff --git a/ultralytics/bus.jpg b/ultralytics/bus.jpg new file mode 100755 index 0000000..b43e311 Binary files /dev/null and b/ultralytics/bus.jpg differ diff --git a/ultralytics/bus.jpg:Zone.Identifier b/ultralytics/bus.jpg:Zone.Identifier new file mode 100755 index 0000000..a45e1ac --- /dev/null +++ b/ultralytics/bus.jpg:Zone.Identifier @@ -0,0 +1,2 @@ +[ZoneTransfer] +ZoneId=3 diff --git a/ultralytics/docker/Dockerfile b/ultralytics/docker/Dockerfile new file mode 100755 index 0000000..e3a32c8 --- /dev/null +++ b/ultralytics/docker/Dockerfile @@ -0,0 +1,82 @@ +# Ultralytics YOLO 🚀, AGPL-3.0 license +# Builds ultralytics/ultralytics:latest image on DockerHub https://hub.docker.com/r/ultralytics/ultralytics +# Image is CUDA-optimized for YOLOv8 single/multi-GPU training and inference + +# Start FROM PyTorch image https://hub.docker.com/r/pytorch/pytorch or nvcr.io/nvidia/pytorch:23.03-py3 +FROM pytorch/pytorch:2.1.0-cuda12.1-cudnn8-runtime +RUN pip install --no-cache nvidia-tensorrt --index-url https://pypi.ngc.nvidia.com + +# Downloads to user config dir +ADD https://ultralytics.com/assets/Arial.ttf https://ultralytics.com/assets/Arial.Unicode.ttf /root/.config/Ultralytics/ + +# Install linux packages +# g++ required to build 'tflite_support' and 'lap' packages, libusb-1.0-0 required for 'tflite_support' package +RUN apt update \ + && apt install --no-install-recommends -y gcc git zip curl htop libgl1 libglib2.0-0 libpython3-dev gnupg g++ libusb-1.0-0 + +# Security updates +# https://security.snyk.io/vuln/SNYK-UBUNTU1804-OPENSSL-3314796 +RUN apt upgrade --no-install-recommends -y openssl tar + +# Create working directory +WORKDIR /usr/src/ultralytics + +# Copy contents +# COPY . /usr/src/ultralytics # git permission issues inside container +RUN git clone https://github.com/ultralytics/ultralytics -b main /usr/src/ultralytics +ADD https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n.pt /usr/src/ultralytics/ + +# Install pip packages +RUN python3 -m pip install --upgrade pip wheel +RUN pip install --no-cache -e ".[export]" albumentations comet pycocotools pytest-cov + +# Run exports to AutoInstall packages +RUN yolo export model=tmp/yolov8n.pt format=edgetpu imgsz=32 +RUN yolo export model=tmp/yolov8n.pt format=ncnn imgsz=32 +# Requires <= Python 3.10, bug with paddlepaddle==2.5.0 https://github.com/PaddlePaddle/X2Paddle/issues/991 +RUN pip install --no-cache paddlepaddle==2.4.2 x2paddle +# Fix error: `np.bool` was a deprecated alias for the builtin `bool` segmentation error in Tests +RUN pip install --no-cache numpy==1.23.5 +# Remove exported models +RUN rm -rf tmp + +# Set environment variables +ENV OMP_NUM_THREADS=1 +# Avoid DDP error "MKL_THREADING_LAYER=INTEL is incompatible with libgomp.so.1 library" https://github.com/pytorch/pytorch/issues/37377 +ENV MKL_THREADING_LAYER=GNU + + +# Usage Examples ------------------------------------------------------------------------------------------------------- + +# Build and Push +# t=ultralytics/ultralytics:latest && sudo docker build -f docker/Dockerfile -t $t . && sudo docker push $t + +# Pull and Run with access to all GPUs +# t=ultralytics/ultralytics:latest && sudo docker pull $t && sudo docker run -it --ipc=host --gpus all $t + +# Pull and Run with access to GPUs 2 and 3 (inside container CUDA devices will appear as 0 and 1) +# t=ultralytics/ultralytics:latest && sudo docker pull $t && sudo docker run -it --ipc=host --gpus '"device=2,3"' $t + +# Pull and Run with local directory access +# t=ultralytics/ultralytics:latest && sudo docker pull $t && sudo docker run -it --ipc=host --gpus all -v "$(pwd)"/datasets:/usr/src/datasets $t + +# Kill all +# sudo docker kill $(sudo docker ps -q) + +# Kill all image-based +# sudo docker kill $(sudo docker ps -qa --filter ancestor=ultralytics/ultralytics:latest) + +# DockerHub tag update +# t=ultralytics/ultralytics:latest tnew=ultralytics/ultralytics:v6.2 && sudo docker pull $t && sudo docker tag $t $tnew && sudo docker push $tnew + +# Clean up +# sudo docker system prune -a --volumes + +# Update Ubuntu drivers +# https://www.maketecheasier.com/install-nvidia-drivers-ubuntu/ + +# DDP test +# python -m torch.distributed.run --nproc_per_node 2 --master_port 1 train.py --epochs 3 + +# GCP VM from Image +# docker.io/ultralytics/ultralytics:latest diff --git a/ultralytics/docker/Dockerfile-arm64 b/ultralytics/docker/Dockerfile-arm64 new file mode 100755 index 0000000..aedb4f2 --- /dev/null +++ b/ultralytics/docker/Dockerfile-arm64 @@ -0,0 +1,44 @@ +# Ultralytics YOLO 🚀, AGPL-3.0 license +# Builds ultralytics/ultralytics:latest-arm64 image on DockerHub https://hub.docker.com/r/ultralytics/ultralytics +# Image is aarch64-compatible for Apple M1 and other ARM architectures i.e. Jetson Nano and Raspberry Pi + +# Start FROM Ubuntu image https://hub.docker.com/_/ubuntu +FROM arm64v8/ubuntu:22.04 + +# Downloads to user config dir +ADD https://ultralytics.com/assets/Arial.ttf https://ultralytics.com/assets/Arial.Unicode.ttf /root/.config/Ultralytics/ + +# Install linux packages +# g++ required to build 'tflite_support' and 'lap' packages, libusb-1.0-0 required for 'tflite_support' package +RUN apt update \ + && apt install --no-install-recommends -y python3-pip git zip curl htop gcc libgl1 libglib2.0-0 libpython3-dev gnupg g++ libusb-1.0-0 + +# Create working directory +WORKDIR /usr/src/ultralytics + +# Copy contents +# COPY . /usr/src/ultralytics # git permission issues inside container +RUN git clone https://github.com/ultralytics/ultralytics -b main /usr/src/ultralytics +ADD https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n.pt /usr/src/ultralytics/ + +# Install pip packages +RUN python3 -m pip install --upgrade pip wheel +RUN pip install --no-cache -e . + +# Creates a symbolic link to make 'python' point to 'python3' +RUN ln -sf /usr/bin/python3 /usr/bin/python + + +# Usage Examples ------------------------------------------------------------------------------------------------------- + +# Build and Push +# t=ultralytics/ultralytics:latest-arm64 && sudo docker build --platform linux/arm64 -f docker/Dockerfile-arm64 -t $t . && sudo docker push $t + +# Run +# t=ultralytics/ultralytics:latest-arm64 && sudo docker run -it --ipc=host $t + +# Pull and Run +# t=ultralytics/ultralytics:latest-arm64 && sudo docker pull $t && sudo docker run -it --ipc=host $t + +# Pull and Run with local volume mounted +# t=ultralytics/ultralytics:latest-arm64 && sudo docker pull $t && sudo docker run -it --ipc=host -v "$(pwd)"/datasets:/usr/src/datasets $t diff --git a/ultralytics/docker/Dockerfile-arm64:Zone.Identifier b/ultralytics/docker/Dockerfile-arm64:Zone.Identifier new file mode 100755 index 0000000..a45e1ac --- /dev/null +++ b/ultralytics/docker/Dockerfile-arm64:Zone.Identifier @@ -0,0 +1,2 @@ +[ZoneTransfer] +ZoneId=3 diff --git a/ultralytics/docker/Dockerfile-conda b/ultralytics/docker/Dockerfile-conda new file mode 100755 index 0000000..73d38d6 --- /dev/null +++ b/ultralytics/docker/Dockerfile-conda @@ -0,0 +1,38 @@ +# Ultralytics YOLO 🚀, AGPL-3.0 license +# Builds ultralytics/ultralytics:latest-conda image on DockerHub https://hub.docker.com/r/ultralytics/ultralytics +# Image is optimized for Ultralytics Anaconda (https://anaconda.org/conda-forge/ultralytics) installation and usage + +# Start FROM miniconda3 image https://hub.docker.com/r/continuumio/miniconda3 +FROM continuumio/miniconda3:latest + +# Downloads to user config dir +ADD https://ultralytics.com/assets/Arial.ttf https://ultralytics.com/assets/Arial.Unicode.ttf /root/.config/Ultralytics/ + +# Install linux packages +RUN apt update \ + && apt install --no-install-recommends -y libgl1 + +# Copy contents +ADD https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n.pt . + +# Install conda packages +# mkl required to fix 'OSError: libmkl_intel_lp64.so.2: cannot open shared object file: No such file or directory' +RUN conda config --set solver libmamba && \ + conda install pytorch torchvision pytorch-cuda=11.8 -c pytorch -c nvidia && \ + conda install -c conda-forge ultralytics mkl + # conda install -c pytorch -c nvidia -c conda-forge pytorch torchvision pytorch-cuda=11.8 ultralytics mkl + + +# Usage Examples ------------------------------------------------------------------------------------------------------- + +# Build and Push +# t=ultralytics/ultralytics:latest-conda && sudo docker build -f docker/Dockerfile-cpu -t $t . && sudo docker push $t + +# Run +# t=ultralytics/ultralytics:latest-conda && sudo docker run -it --ipc=host $t + +# Pull and Run +# t=ultralytics/ultralytics:latest-conda && sudo docker pull $t && sudo docker run -it --ipc=host $t + +# Pull and Run with local volume mounted +# t=ultralytics/ultralytics:latest-conda && sudo docker pull $t && sudo docker run -it --ipc=host -v "$(pwd)"/datasets:/usr/src/datasets $t diff --git a/ultralytics/docker/Dockerfile-conda:Zone.Identifier b/ultralytics/docker/Dockerfile-conda:Zone.Identifier new file mode 100755 index 0000000..a45e1ac --- /dev/null +++ b/ultralytics/docker/Dockerfile-conda:Zone.Identifier @@ -0,0 +1,2 @@ +[ZoneTransfer] +ZoneId=3 diff --git a/ultralytics/docker/Dockerfile-cpu b/ultralytics/docker/Dockerfile-cpu new file mode 100755 index 0000000..42e5ec3 --- /dev/null +++ b/ultralytics/docker/Dockerfile-cpu @@ -0,0 +1,55 @@ +# Ultralytics YOLO 🚀, AGPL-3.0 license +# Builds ultralytics/ultralytics:latest-cpu image on DockerHub https://hub.docker.com/r/ultralytics/ultralytics +# Image is CPU-optimized for ONNX, OpenVINO and PyTorch YOLOv8 deployments + +# Start FROM Ubuntu image https://hub.docker.com/_/ubuntu +FROM ubuntu:23.10 + +# Downloads to user config dir +ADD https://ultralytics.com/assets/Arial.ttf https://ultralytics.com/assets/Arial.Unicode.ttf /root/.config/Ultralytics/ + +# Install linux packages +# g++ required to build 'tflite_support' and 'lap' packages, libusb-1.0-0 required for 'tflite_support' package +RUN apt update \ + && apt install --no-install-recommends -y python3-pip git zip curl htop libgl1 libglib2.0-0 libpython3-dev gnupg g++ libusb-1.0-0 + +# Create working directory +WORKDIR /usr/src/ultralytics + +# Copy contents +# COPY . /usr/src/ultralytics # git permission issues inside container +RUN git clone https://github.com/ultralytics/ultralytics -b main /usr/src/ultralytics +ADD https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n.pt /usr/src/ultralytics/ + +# Remove python3.11/EXTERNALLY-MANAGED or use 'pip install --break-system-packages' avoid 'externally-managed-environment' Ubuntu nightly error +RUN rm -rf /usr/lib/python3.11/EXTERNALLY-MANAGED + +# Install pip packages +RUN python3 -m pip install --upgrade pip wheel +RUN pip install --no-cache -e ".[export]" --extra-index-url https://download.pytorch.org/whl/cpu + +# Run exports to AutoInstall packages +RUN yolo export model=tmp/yolov8n.pt format=edgetpu imgsz=32 +RUN yolo export model=tmp/yolov8n.pt format=ncnn imgsz=32 +# Requires <= Python 3.10, bug with paddlepaddle==2.5.0 https://github.com/PaddlePaddle/X2Paddle/issues/991 +# RUN pip install --no-cache paddlepaddle==2.4.2 x2paddle +# Remove exported models +RUN rm -rf tmp + +# Creates a symbolic link to make 'python' point to 'python3' +RUN ln -sf /usr/bin/python3 /usr/bin/python + + +# Usage Examples ------------------------------------------------------------------------------------------------------- + +# Build and Push +# t=ultralytics/ultralytics:latest-cpu && sudo docker build -f docker/Dockerfile-cpu -t $t . && sudo docker push $t + +# Run +# t=ultralytics/ultralytics:latest-cpu && sudo docker run -it --ipc=host $t + +# Pull and Run +# t=ultralytics/ultralytics:latest-cpu && sudo docker pull $t && sudo docker run -it --ipc=host $t + +# Pull and Run with local volume mounted +# t=ultralytics/ultralytics:latest-cpu && sudo docker pull $t && sudo docker run -it --ipc=host -v "$(pwd)"/datasets:/usr/src/datasets $t diff --git a/ultralytics/docker/Dockerfile-cpu:Zone.Identifier b/ultralytics/docker/Dockerfile-cpu:Zone.Identifier new file mode 100755 index 0000000..a45e1ac --- /dev/null +++ b/ultralytics/docker/Dockerfile-cpu:Zone.Identifier @@ -0,0 +1,2 @@ +[ZoneTransfer] +ZoneId=3 diff --git a/ultralytics/docker/Dockerfile-jetson b/ultralytics/docker/Dockerfile-jetson new file mode 100755 index 0000000..c177b8e --- /dev/null +++ b/ultralytics/docker/Dockerfile-jetson @@ -0,0 +1,48 @@ +# Ultralytics YOLO 🚀, AGPL-3.0 license +# Builds ultralytics/ultralytics:jetson image on DockerHub https://hub.docker.com/r/ultralytics/ultralytics +# Supports JetPack for YOLOv8 on Jetson Nano, TX1/TX2, Xavier NX, AGX Xavier, AGX Orin, and Orin NX + +# Start FROM https://catalog.ngc.nvidia.com/orgs/nvidia/containers/l4t-pytorch +FROM nvcr.io/nvidia/l4t-pytorch:r35.2.1-pth2.0-py3 + +# Downloads to user config dir +ADD https://ultralytics.com/assets/Arial.ttf https://ultralytics.com/assets/Arial.Unicode.ttf /root/.config/Ultralytics/ + +# Install linux packages +# g++ required to build 'tflite_support' and 'lap' packages, libusb-1.0-0 required for 'tflite_support' package +RUN apt update \ + && apt install --no-install-recommends -y gcc git zip curl htop libgl1 libglib2.0-0 libpython3-dev gnupg g++ libusb-1.0-0 + +# Create working directory +WORKDIR /usr/src/ultralytics + +# Copy contents +# COPY . /usr/src/ultralytics # git permission issues inside container +RUN git clone https://github.com/ultralytics/ultralytics -b main /usr/src/ultralytics +ADD https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n.pt /usr/src/ultralytics/ + +# Remove opencv-python from requirements.txt as it conflicts with opencv-python installed in base image +RUN grep -v '^opencv-python' requirements.txt > tmp.txt && mv tmp.txt requirements.txt + +# Install pip packages manually for TensorRT compatibility https://github.com/NVIDIA/TensorRT/issues/2567 +RUN python3 -m pip install --upgrade pip wheel +RUN pip install --no-cache tqdm matplotlib pyyaml psutil pandas onnx "numpy==1.23" +RUN pip install --no-cache -e . + +# Set environment variables +ENV OMP_NUM_THREADS=1 + + +# Usage Examples ------------------------------------------------------------------------------------------------------- + +# Build and Push +# t=ultralytics/ultralytics:latest-jetson && sudo docker build --platform linux/arm64 -f docker/Dockerfile-jetson -t $t . && sudo docker push $t + +# Run +# t=ultralytics/ultralytics:latest-jetson && sudo docker run -it --ipc=host $t + +# Pull and Run +# t=ultralytics/ultralytics:latest-jetson && sudo docker pull $t && sudo docker run -it --ipc=host $t + +# Pull and Run with NVIDIA runtime +# t=ultralytics/ultralytics:latest-jetson && sudo docker pull $t && sudo docker run -it --ipc=host --runtime=nvidia $t diff --git a/ultralytics/docker/Dockerfile-jetson:Zone.Identifier b/ultralytics/docker/Dockerfile-jetson:Zone.Identifier new file mode 100755 index 0000000..a45e1ac --- /dev/null +++ b/ultralytics/docker/Dockerfile-jetson:Zone.Identifier @@ -0,0 +1,2 @@ +[ZoneTransfer] +ZoneId=3 diff --git a/ultralytics/docker/Dockerfile-python b/ultralytics/docker/Dockerfile-python new file mode 100755 index 0000000..b227fa6 --- /dev/null +++ b/ultralytics/docker/Dockerfile-python @@ -0,0 +1,52 @@ +# Ultralytics YOLO 🚀, AGPL-3.0 license +# Builds ultralytics/ultralytics:latest-cpu image on DockerHub https://hub.docker.com/r/ultralytics/ultralytics +# Image is CPU-optimized for ONNX, OpenVINO and PyTorch YOLOv8 deployments + +# Use the official Python 3.10 slim-bookworm as base image +FROM python:3.10-slim-bookworm + +# Downloads to user config dir +ADD https://ultralytics.com/assets/Arial.ttf https://ultralytics.com/assets/Arial.Unicode.ttf /root/.config/Ultralytics/ + +# Install linux packages +# g++ required to build 'tflite_support' and 'lap' packages, libusb-1.0-0 required for 'tflite_support' package +RUN apt update \ + && apt install --no-install-recommends -y python3-pip git zip curl htop libgl1 libglib2.0-0 libpython3-dev gnupg g++ libusb-1.0-0 + +# Create working directory +WORKDIR /usr/src/ultralytics + +# Copy contents +# COPY . /usr/src/ultralytics # git permission issues inside container +RUN git clone https://github.com/ultralytics/ultralytics -b main /usr/src/ultralytics +ADD https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n.pt /usr/src/ultralytics/ + +# Remove python3.11/EXTERNALLY-MANAGED or use 'pip install --break-system-packages' avoid 'externally-managed-environment' Ubuntu nightly error +# RUN rm -rf /usr/lib/python3.11/EXTERNALLY-MANAGED + +# Install pip packages +RUN python3 -m pip install --upgrade pip wheel +RUN pip install --no-cache -e ".[export]" --extra-index-url https://download.pytorch.org/whl/cpu + +# Run exports to AutoInstall packages +RUN yolo export model=tmp/yolov8n.pt format=edgetpu imgsz=32 +RUN yolo export model=tmp/yolov8n.pt format=ncnn imgsz=32 +# Requires <= Python 3.10, bug with paddlepaddle==2.5.0 https://github.com/PaddlePaddle/X2Paddle/issues/991 +RUN pip install --no-cache paddlepaddle==2.4.2 x2paddle +# Remove exported models +RUN rm -rf tmp + + +# Usage Examples ------------------------------------------------------------------------------------------------------- + +# Build and Push +# t=ultralytics/ultralytics:latest-python && sudo docker build -f docker/Dockerfile-python -t $t . && sudo docker push $t + +# Run +# t=ultralytics/ultralytics:latest-python && sudo docker run -it --ipc=host $t + +# Pull and Run +# t=ultralytics/ultralytics:latest-python && sudo docker pull $t && sudo docker run -it --ipc=host $t + +# Pull and Run with local volume mounted +# t=ultralytics/ultralytics:latest-python && sudo docker pull $t && sudo docker run -it --ipc=host -v "$(pwd)"/datasets:/usr/src/datasets $t diff --git a/ultralytics/docker/Dockerfile-python:Zone.Identifier b/ultralytics/docker/Dockerfile-python:Zone.Identifier new file mode 100755 index 0000000..a45e1ac --- /dev/null +++ b/ultralytics/docker/Dockerfile-python:Zone.Identifier @@ -0,0 +1,2 @@ +[ZoneTransfer] +ZoneId=3 diff --git a/ultralytics/docker/Dockerfile-runner b/ultralytics/docker/Dockerfile-runner new file mode 100755 index 0000000..c0f8659 --- /dev/null +++ b/ultralytics/docker/Dockerfile-runner @@ -0,0 +1,38 @@ +# Ultralytics YOLO 🚀, AGPL-3.0 license +# Builds GitHub actions CI runner image for deployment to DockerHub https://hub.docker.com/r/ultralytics/ultralytics +# Image is CUDA-optimized for YOLOv8 single/multi-GPU training and inference tests + +# Start FROM Ultralytics GPU image +FROM ultralytics/ultralytics:latest + +# Set the working directory +WORKDIR /actions-runner + +# Download and unpack the latest runner from https://github.com/actions/runner +RUN FILENAME=actions-runner-linux-x64-2.309.0.tar.gz && \ + curl -o $FILENAME -L https://github.com/actions/runner/releases/download/v2.309.0/$FILENAME && \ + tar xzf $FILENAME && \ + rm $FILENAME + +# Install runner dependencies +ENV RUNNER_ALLOW_RUNASROOT=1 +ENV DEBIAN_FRONTEND=noninteractive +RUN ./bin/installdependencies.sh && \ + apt-get -y install libicu-dev + +# Inline ENTRYPOINT command to configure and start runner with default TOKEN and NAME +ENTRYPOINT sh -c './config.sh --url https://github.com/ultralytics/ultralytics \ + --token ${GITHUB_RUNNER_TOKEN:-TOKEN} \ + --name ${GITHUB_RUNNER_NAME:-NAME} \ + --labels gpu-latest \ + --replace && \ + ./run.sh' + + +# Usage Examples ------------------------------------------------------------------------------------------------------- + +# Build and Push +# t=ultralytics/ultralytics:latest-runner && sudo docker build -f docker/Dockerfile-runner -t $t . && sudo docker push $t + +# Pull and Run in detached mode with access to GPUs 0 and 1 +# t=ultralytics/ultralytics:latest-runner && sudo docker run -d -e GITHUB_RUNNER_TOKEN=TOKEN -e GITHUB_RUNNER_NAME=NAME --ipc=host --gpus '"device=0,1"' $t diff --git a/ultralytics/docker/Dockerfile-runner:Zone.Identifier b/ultralytics/docker/Dockerfile-runner:Zone.Identifier new file mode 100755 index 0000000..a45e1ac --- /dev/null +++ b/ultralytics/docker/Dockerfile-runner:Zone.Identifier @@ -0,0 +1,2 @@ +[ZoneTransfer] +ZoneId=3 diff --git a/ultralytics/docker/Dockerfile:Zone.Identifier b/ultralytics/docker/Dockerfile:Zone.Identifier new file mode 100755 index 0000000..a45e1ac --- /dev/null +++ b/ultralytics/docker/Dockerfile:Zone.Identifier @@ -0,0 +1,2 @@ +[ZoneTransfer] +ZoneId=3 diff --git a/ultralytics/docs/README.md b/ultralytics/docs/README.md new file mode 100755 index 0000000..a5da59e --- /dev/null +++ b/ultralytics/docs/README.md @@ -0,0 +1,102 @@ +# Ultralytics Docs + +Ultralytics Docs are deployed to [https://docs.ultralytics.com](https://docs.ultralytics.com). + +[](https://github.com/ultralytics/docs/actions/workflows/pages/pages-build-deployment) [](https://github.com/ultralytics/docs/actions/workflows/links.yml) + +## Install Ultralytics package + +[](https://badge.fury.io/py/ultralytics) [](https://pepy.tech/project/ultralytics) + +To install the ultralytics package in developer mode, you will need to have Git and Python 3 installed on your system. Then, follow these steps: + +1. Clone the ultralytics repository to your local machine using Git: + + ```bash + git clone https://github.com/ultralytics/ultralytics.git + ``` + +2. Navigate to the root directory of the repository: + + ```bash + cd ultralytics + ``` + +3. Install the package in developer mode using pip: + + ```bash + pip install -e '.[dev]' + ``` + +This will install the ultralytics package and its dependencies in developer mode, allowing you to make changes to the package code and have them reflected immediately in your Python environment. + +Note that you may need to use the pip3 command instead of pip if you have multiple versions of Python installed on your system. + +## Building and Serving Locally + +The `mkdocs serve` command is used to build and serve a local version of the MkDocs documentation site. It is typically used during the development and testing phase of a documentation project. + +```bash +mkdocs serve +``` + +Here is a breakdown of what this command does: + +- `mkdocs`: This is the command-line interface (CLI) for the MkDocs static site generator. It is used to build and serve MkDocs sites. +- `serve`: This is a subcommand of the `mkdocs` CLI that tells it to build and serve the documentation site locally. +- `-a`: This flag specifies the hostname and port number to bind the server to. The default value is `localhost:8000`. +- `-t`: This flag specifies the theme to use for the documentation site. The default value is `mkdocs`. +- `-s`: This flag tells the `serve` command to serve the site in silent mode, which means it will not display any log messages or progress updates. When you run the `mkdocs serve` command, it will build the documentation site using the files in the `docs/` directory and serve it at the specified hostname and port number. You can then view the site by going to the URL in your web browser. + +While the site is being served, you can make changes to the documentation files and see them reflected in the live site immediately. This is useful for testing and debugging your documentation before deploying it to a live server. + +To stop the serve command and terminate the local server, you can use the `CTRL+C` keyboard shortcut. + +## Building and Serving Multi-Language + +For multi-language MkDocs sites use the following additional steps: + +1. Add all new language *.md files to git commit: `git add docs/**/*.md -f` +2. Build all languages to the `/site` directory. Verify that the top-level `/site` directory contains `CNAME`, `robots.txt` and `sitemap.xml` files, if applicable. + + ```bash + # Remove existing /site directory + rm -rf site + + # Loop through all *.yml files in the docs directory + mkdocs build -f docs/mkdocs.yml + for file in docs/mkdocs_*.yml; do + echo "Building MkDocs site with configuration file: $file" + mkdocs build -f "$file" + done + ``` + +3. Preview in web browser with: + + ```bash + cd site + python -m http.server + open http://localhost:8000 # on macOS + ``` + +Note the above steps are combined into the Ultralytics [build_docs.py](https://github.com/ultralytics/ultralytics/blob/main/docs/build_docs.py) script. + +## Deploying Your Documentation Site + +To deploy your MkDocs documentation site, you will need to choose a hosting provider and a deployment method. Some popular options include GitHub Pages, GitLab Pages, and Amazon S3. + +Before you can deploy your site, you will need to configure your `mkdocs.yml` file to specify the remote host and any other necessary deployment settings. + +Once you have configured your `mkdocs.yml` file, you can use the `mkdocs deploy` command to build and deploy your site. This command will build the documentation site using the files in the `docs/` directory and the specified configuration file and theme, and then deploy the site to the specified remote host. + +For example, to deploy your site to GitHub Pages using the gh-deploy plugin, you can use the following command: + +```bash +mkdocs gh-deploy +``` + +If you are using GitHub Pages, you can set a custom domain for your documentation site by going to the "Settings" page for your repository and updating the "Custom domain" field in the "GitHub Pages" section. + + + +For more information on deploying your MkDocs documentation site, see the [MkDocs documentation](https://www.mkdocs.org/user-guide/deploying-your-docs/). diff --git a/ultralytics/docs/README.md:Zone.Identifier b/ultralytics/docs/README.md:Zone.Identifier new file mode 100755 index 0000000..a45e1ac --- /dev/null +++ b/ultralytics/docs/README.md:Zone.Identifier @@ -0,0 +1,2 @@ +[ZoneTransfer] +ZoneId=3 diff --git a/ultralytics/docs/ar/index.md b/ultralytics/docs/ar/index.md new file mode 100755 index 0000000..ab95a6a --- /dev/null +++ b/ultralytics/docs/ar/index.md @@ -0,0 +1,83 @@ +--- +comments: true +description: استكشف دليل كامل لـ Ultralytics YOLOv8 ، نموذج كشف الكائنات وتجزئة الصور ذو السرعة العالية والدقة العالية. تثبيت المحررة ، والتنبؤ ، والتدريب والمزيد. +keywords: Ultralytics، YOLOv8، كشف الكائنات، تجزئة الصور، التعلم الآلي، التعلم العميق، الرؤية الحاسوبية، YOLOv8 installation، YOLOv8 prediction، YOLOv8 training، تاريخ YOLO، تراخيص YOLO +--- + + + +يتم تقديم [Ultralytics](https://ultralytics.com) [YOLOv8](https://github.com/ultralytics/ultralytics) ، أحدث إصدار من نموذج كشف الكائنات وتجزئة الصور المشهورة للوقت الفعلي. يعتمد YOLOv8 على التطورات المتقدمة في التعلم العميق والرؤية الحاسوبية ، ويقدم أداءً فائقًا من حيث السرعة والدقة. يجعل التصميم البسيط له مناسبًا لمختلف التطبيقات وقابلًا للتكيف بسهولة مع منصات الأجهزة المختلفة ، من الأجهزة الحافة إلى واجهات برمجة التطبيقات في السحابة. + +استكشف أدلة YOLOv8 ، وهي مورد شامل يهدف إلى مساعدتك في فهم واستخدام ميزاته وقدراته. سواء كنت ممارسًا في مجال التعلم الآلي من ذوي الخبرة أو جديدًا في هذا المجال ، فإن الهدف من هذا المركز هو تحقيق الحد الأقصى لإمكانات YOLOv8 في مشاريعك. + +!!! Note "ملاحظة" + + 🚧 تم تطوير وثائقنا متعددة اللغات حاليًا ، ونعمل بجد لتحسينها. شكراً لصبرك! 🙏 + +## من أين أبدأ + +- **تثبيت** `ultralytics` بواسطة pip والبدء في العمل في دقائق [:material-clock-fast: ابدأ الآن](quickstart.md){ .md-button } +- **توقع** الصور ومقاطع الفيديو الجديدة بواسطة YOLOv8 [:octicons-image-16: توقع على الصور](modes/predict.md){ .md-button } +- **تدريب** نموذج YOLOv8 الجديد على مجموعة البيانات المخصصة الخاصة بك [:fontawesome-solid-brain: قم بتدريب نموذج](modes/train.md){ .md-button } +- **استكشاف** مهام YOLOv8 مثل التجزئة والتصنيف والوضع والتتبع [:material-magnify-expand: استكشاف المهام](tasks/index.md){ .md-button } + +

+

+

+

+ مشاهدة: كيفية تدريب نموذج YOLOv8 على مجموعة بيانات مخصصة في جوجل كولاب.

+

+

+

+

+ شاهد: تشغيل نماذج YOLO من Ultralytics في بضعة أسطر من الكود فقط.

+

(بكسل) | mAPval

50-95 | سرعة

معالج الجهاز ONNX

(مللي ثانية) | سرعة

حويصلة A100 TensorRT

(مللي ثانية) | المعلمات

(مليون) | FLOPs

(بليون) | + |---------------------------------------------------------------------------------------------|----------------------------------------------------------------------------------------------------------------|-----------------------|----------------------|--------------------------------|-------------------------------------|--------------------|-------------------| + | [yolov5nu.pt](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov5nu.pt) | [yolov5n.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5.yaml) | 640 | 34.3 | 73.6 | 1.06 | 2.6 | 7.7 | + | [yolov5su.pt](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov5su.pt) | [yolov5s.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5.yaml) | 640 | 43.0 | 120.7 | 1.27 | 9.1 | 24.0 | + | [yolov5mu.pt](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov5mu.pt) | [yolov5m.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5.yaml) | 640 | 49.0 | 233.9 | 1.86 | 25.1 | 64.2 | + | [yolov5lu.pt](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov5lu.pt) | [yolov5l.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5.yaml) | 640 | 52.2 | 408.4 | 2.50 | 53.2 | 135.0 | + | [yolov5xu.pt](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov5xu.pt) | [yolov5x.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5.yaml) | 640 | 53.2 | 763.2 | 3.81 | 97.2 | 246.4 | + | | | | | | | | | + | [yolov5n6u.pt](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov5n6u.pt) | [yolov5n6.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5-p6.yaml) | 1280 | 42.1 | 211.0 | 1.83 | 4.3 | 7.8 | + | [yolov5s6u.pt](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov5s6u.pt) | [yolov5s6.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5-p6.yaml) | 1280 | 48.6 | 422.6 | 2.34 | 15.3 | 24.6 | + | [yolov5m6u.pt](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov5m6u.pt) | [yolov5m6.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5-p6.yaml) | 1280 | 53.6 | 810.9 | 4.36 | 41.2 | 65.7 | + | [yolov5l6u.pt](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov5l6u.pt) | [yolov5l6.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5-p6.yaml) | 1280 | 55.7 | 1470.9 | 5.47 | 86.1 | 137.4 | + | [yolov5x6u.pt](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov5x6u.pt) | [yolov5x6.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5-p6.yaml) | 1280 | 56.8 | 2436.5 | 8.98 | 155.4 | 250.7 | + +## أمثلة للاستخدام + +يقدم هذا المثال أمثلة بسيطة للغاية للتدريب والتشخيص باستخدام YOLOv5. يُمكن إنشاء نموذج مثيل في البرمجة باستخدام نماذج PyTorch المدربة مسبقًا في صيغة `*.pt` وملفات التكوين `*.yaml`: + +```python +from ultralytics import YOLO + +#قم بتحميل نموذج YOLOv5n المدرب مسبقًا على مجموعة بيانات COCO +model = YOLO('yolov5n.pt') + +# قم بعرض معلومات النموذج (اختياري) +model.info() + +# قم بتدريب النموذج على مجموعة البيانات COCO8 لمدة 100 دورة +results = model.train(data='coco8.yaml', epochs=100, imgsz=640) + +# قم بتشغيل التشخيص بنموذج YOLOv5n على صورة 'bus.jpg' +results = model('path/to/bus.jpg') +``` + +=== "سطر الأوامر" + + يتاح سطر الأوامر لتشغيل النماذج مباشرة: + + ```bash + # قم بتحميل نموذج YOLOv5n المدرب مسبقًا على مجموعة بيانات COCO8 وقم بتدريبه لمدة 100 دورة + yolo train model=yolov5n.pt data=coco8.yaml epochs=100 imgsz=640 + + # قم بتحميل نموذج YOLOv5n المدرب مسبقًا على مجموعة بيانات COCO8 وتشغيل حالة التشخيص على صورة 'bus.jpg' + yolo predict model=yolov5n.pt source=path/to/bus.jpg + ``` + +## الاستشهادات والتقدير + +إذا قمت باستخدام YOLOv5 أو YOLOv5u في بحثك، يرجى استشهاد نموذج Ultralytics YOLOv5 بطريقة الاقتباس التالية: + +!!! Quote "" + + === "BibTeX" + ```bibtex + @software{yolov5, + title = {Ultralytics YOLOv5}, + author = {Glenn Jocher}, + year = {2020}, + version = {7.0}, + license = {AGPL-3.0}, + url = {https://github.com/ultralytics/yolov5}, + doi = {10.5281/zenodo.3908559}, + orcid = {0000-0001-5950-6979} + } + ``` + +يرجى ملاحظة أن نماذج YOLOv5 متاحة بترخيص [AGPL-3.0](https://github.com/ultralytics/ultralytics/blob/main/LICENSE) و[Enterprise](https://ultralytics.com/license). diff --git a/ultralytics/docs/ar/models/yolov5.md:Zone.Identifier b/ultralytics/docs/ar/models/yolov5.md:Zone.Identifier new file mode 100755 index 0000000..a45e1ac --- /dev/null +++ b/ultralytics/docs/ar/models/yolov5.md:Zone.Identifier @@ -0,0 +1,2 @@ +[ZoneTransfer] +ZoneId=3 diff --git a/ultralytics/docs/ar/models/yolov6.md b/ultralytics/docs/ar/models/yolov6.md new file mode 100755 index 0000000..12dd557 --- /dev/null +++ b/ultralytics/docs/ar/models/yolov6.md @@ -0,0 +1,107 @@ +--- +comments: true +description: استكشف نموذج Meituan YOLOv6 للكشف عن الكائنات الحديثة، والذي يوفر توازنًا مذهلاً بين السرعة والدقة، مما يجعله الخيار الأمثل لتطبيقات الوقت الحقيقي. تعرّف على الميزات والنماذج المُدربة مسبقًا واستخدام Python. +keywords: Meituan YOLOv6، الكشف عن الكائنات، Ultralytics، YOLOv6 docs، Bi-directional Concatenation، تدريب بمساعدة العناصر، النماذج المدربة مسبقا، تطبيقات الوقت الحقيقي +--- + +# Meituan YOLOv6 + +## نظرة عامة + +[Meituan](https://about.meituan.com/) YOLOv6 هو منظّف الكائنات الحديثة الحديثة الذي يُقدم توازنًا ملحوظًا بين السرعة والدقة، مما يجعله خيارًا شائعًا لتطبيقات الوقت الحقيقي. يُقدم هذا النموذج العديد من التحسينات الملحوظة في بنيته ونظام التدريب، بما في ذلك تطبيق وحدة Bi-directional Concatenation (BiC)، واستراتيجية AAT (anchor-aided training) التي تعتمد على العناصر، وتصميم محسّن للأساس والرقبة لتحقيق أداء على مجموعة بيانات COCO يفوق جميع النماذج الأخرى. + + + +**نظرة عامة على YOLOv6.** مخطط بنية النموذج يوضح المكونات المعاد تصميمها واستراتيجيات التدريب التي أدت إلى تحسينات أداء كبيرة. (أ) الرقبة الخاصة بـ YOLOv6 (N و S معروضان). لاحظ أنه بالنسبة لم/n، يتم استبدال RepBlocks بـ CSPStackRep. (ب) هيكل وحدة BiC. (ج) مكون SimCSPSPPF. ([المصدر](https://arxiv.org/pdf/2301.05586.pdf)). + +### ميزات رئيسية + +- **وحدة Bi-directional Concatenation (BiC):** يقدم YOLOv6 وحدة BiC في الرقبة التابعة للكاشف، مما يعزز إشارات التحديد المحلية ويؤدي إلى زيادة الأداء دون التأثير على السرعة. +- **استراتيجية التدريب بمساعدة العناصر (AAT):** يقدم هذا النموذج استراتيجية AAT للاستفادة من فوائد النماذج المستندة إلى العناصر وغير المستندة إليها دون التضحية في كفاءة الاستدلال. +- **تصميم أساس ورقبة محسّن:** من خلال تعميق YOLOv6 لتشمل مرحلة أخرى في الأساس والرقبة، يحقق هذا النموذج أداءً يفوق جميع النماذج الأخرى على مجموعة بيانات COCO لإدخال عالي الدقة. +- **استراتيجية الاستنباط الذاتي:** يتم تنفيذ استراتيجية استنتاج ذاتي جديدة لتعزيز أداء النماذج الصغيرة من YOLOv6، وذلك عن طريق تعزيز فرع الانحدار المساعد خلال التدريب وإزالته في الاستنتاج لتجنب انخفاض السرعة الواضح. + +## معايير الأداء + +يوفر YOLOv6 مجموعة متنوعة من النماذج المدرّبة مسبقًا بمقاييس مختلفة: + +- YOLOv6-N: ٣٧.٥٪ AP في COCO val2017 عندما يتم استخدام بطاقة NVIDIA Tesla T4 GPU وسرعة ١١٨٧ إطار في الثانية. +- YOLOv6-S: ٤٥.٠٪ AP وسرعة ٤٨٤ إطار في الثانية. +- YOLOv6-M: ٥٠.٠٪ AP وسرعة ٢٢٦ إطار في الثانية. +- YOLOv6-L: ٥٢.٨٪ AP وسرعة ١١٦ إطار في الثانية. +- YOLOv6-L6: دقة حديثة في الزمن الحقيقي. + +كما يوفر YOLOv6 نماذج مؤنقة (quantized models) بدقات مختلفة ونماذج محسنة للمنصات المحمولة. + +## أمثلة عن الاستخدام + +يقدم هذا المثال أمثلة بسيطة لتدريب YOLOv6 واستنتاجه. للحصول على وثائق كاملة حول هذه وأوضاع أخرى [انظر](../modes/index.md) الى الصفحات التوضيحية لتوسعة الوثائق الفائقة ، [توقع](../modes/predict.md) ، [تدريب](../modes/train.md) ، [التحقق](../modes/val.md) و [التصدير](../modes/export.md). + +!!! Example "مثال" + + === "Python" + + يمكن تمرير النماذج المدرّبة مسبقًا بتنسيق `*.pt` في PyTorch وملفات التكوين `*.yaml` لفئة `YOLO()` لإنشاء نموذج في Python: + + ```python + from ultralytics import YOLO + + # إنشاء نموذج YOLOv6n من البداية + model = YOLO('yolov6n.yaml') + + # عرض معلومات النموذج (اختياري) + model.info() + + # تدريب النموذج على مجموعة بيانات مثال COCO8 لمدة 100 دورة تدريب + results = model.train(data='coco8.yaml', epochs=100, imgsz=640) + + # تشغيل الاستنتاج بنموذج YOLOv6n على صورة 'bus.jpg' + results = model('path/to/bus.jpg') + ``` + + === "CLI" + + يمكن استخدام أوامر CLI لتشغيل النماذج مباشرةً: + + ```bash + # إنشاء نموذج YOLOv6n من البداية وتدريبه باستخدام مجموعة بيانات مثال COCO8 لمدة 100 دورة تدريب + yolo train model=yolov6n.yaml data=coco8.yaml epochs=100 imgsz=640 + + # إنشاء نموذج YOLOv6n من البداية وتشغيل الاستنتاج على صورة 'bus.jpg' + yolo predict model=yolov6n.yaml source=path/to/bus.jpg + ``` + +## المهام والأوضاع المدعومة + +تقدم سلسلة YOLOv6 مجموعة من النماذج، والتي تم تحسينها للكشف عن الكائنات عالي الأداء. تلبي هذه النماذج احتياجات الكمبيوتيشن المتنوعة ومتطلبات الدقة، مما يجعلها متعددة الاستخدامات في مجموعة واسعة من التطبيقات. + +| نوع النموذج | الأوزان المدربة مسبقًا | المهام المدعومة | الاستنتاج | التحقق | التدريب | التصدير | +|-------------|------------------------|-----------------------------------------|-----------|--------|---------|---------| +| YOLOv6-N | `yolov6-n.pt` | [الكشف عن الكائنات](../tasks/detect.md) | ✅ | ✅ | ✅ | ✅ | +| YOLOv6-S | `yolov6-s.pt` | [الكشف عن الكائنات](../tasks/detect.md) | ✅ | ✅ | ✅ | ✅ | +| YOLOv6-M | `yolov6-m.pt` | [الكشف عن الكائنات](../tasks/detect.md) | ✅ | ✅ | ✅ | ✅ | +| YOLOv6-L | `yolov6-l.pt` | [الكشف عن الكائنات](../tasks/detect.md) | ✅ | ✅ | ✅ | ✅ | +| YOLOv6-L6 | `yolov6-l6.pt` | [الكشف عن الكائنات](../tasks/detect.md) | ✅ | ✅ | ✅ | ✅ | + +توفر هذه الجدول نظرة عامة مفصلة على النماذج المختلفة لـ YOLOv6، مع تسليط الضوء على قدراتها في مهام الكشف عن الكائنات وتوافقها مع الأوضاع التشغيلية المختلفة مثل [الاستنتاج](../modes/predict.md) و [التحقق](../modes/val.md) و [التدريب](../modes/train.md) و [التصدير](../modes/export.md). هذا الدعم الشامل يضمن أن يمكن للمستخدمين الاستفادة الكاملة من قدرات نماذج YOLOv6 في مجموعة واسعة من سيناريوهات الكشف عن الكائنات. + +## الاقتباسات والتقديرات + +نحن نود أن نقدّم الشكر للمؤلفين على مساهماتهم الهامة في مجال كشف الكائنات في الوقت الحقيقي: + +!!! Quote "" + + === "BibTeX" + + ```bibtex + @misc{li2023yolov6, + title={YOLOv6 v3.0: A Full-Scale Reloading}, + author={Chuyi Li and Lulu Li and Yifei Geng and Hongliang Jiang and Meng Cheng and Bo Zhang and Zaidan Ke and Xiaoming Xu and Xiangxiang Chu}, + year={2023}, + eprint={2301.05586}, + archivePrefix={arXiv}, + primaryClass={cs.CV} + } + ``` + +يمكن العثور على الورقة الأصلية لـ YOLOv6 على [arXiv](https://arxiv.org/abs/2301.05586). نشر المؤلفون عملهم بشكل عام، ويمكن الوصول إلى الشيفرة المصدرية على [GitHub](https://github.com/meituan/YOLOv6). نحن نقدّر جهودهم في تطوير هذا المجال وجعل عملهم متاحًا للمجتمع بأسره. diff --git a/ultralytics/docs/ar/models/yolov6.md:Zone.Identifier b/ultralytics/docs/ar/models/yolov6.md:Zone.Identifier new file mode 100755 index 0000000..a45e1ac --- /dev/null +++ b/ultralytics/docs/ar/models/yolov6.md:Zone.Identifier @@ -0,0 +1,2 @@ +[ZoneTransfer] +ZoneId=3 diff --git a/ultralytics/docs/ar/models/yolov7.md b/ultralytics/docs/ar/models/yolov7.md new file mode 100755 index 0000000..1cdcc63 --- /dev/null +++ b/ultralytics/docs/ar/models/yolov7.md @@ -0,0 +1,66 @@ +--- +comments: true +description: استكشف YOLOv7 ، جهاز كشف الكائنات في الوقت الحقيقي. تعرف على سرعته الفائقة، ودقته المذهلة، وتركيزه الفريد على تحسين الأمتعة التدريبية تدريبياً. +keywords: YOLOv7، كاشف الكائنات في الوقت الحقيقي، الحالة الفنية، Ultralytics، مجموعة بيانات MS COCO، المعيار المعاد تعريفه للنموذج، التسمية الديناميكية، التحجيم الموسع، التحجيم المركب +--- + +# YOLOv7: حقيبة مجانية قابلة للتدريب + +YOLOv7 هو كاشف الكائنات في الوقت الحقيقي الحديث الحالي الذي يتفوق على جميع كاشفات الكائنات المعروفة من حيث السرعة والدقة في النطاق من 5 إطارات في الثانية إلى 160 إطارًا في الثانية. إنه يتمتع بأعلى دقة (٥٦.٨٪ AP) بين جميع كاشفات الكائنات الحالية في الوقت الحقيقي بسرعة ٣٠ إطارًا في الثانية أو أعلى على GPU V100. علاوة على ذلك, يتفوق YOLOv7 على كاشفات الكائنات الأخرى مثل YOLOR, YOLOX, Scaled-YOLOv4, YOLOv5 والعديد من الآخرين من حيث السرعة والدقة. النموذج مدرب على مجموعة بيانات MS COCO من البداية دون استخدام أي مجموعات بيانات أخرى أو وزن مُعين مُسبقًا. رمز المصدر لـ YOLOv7 متاح على GitHub. + + + +**مقارنة بين كاشفات الكائنات الأعلى الفنية.** من النتائج في الجدول 2 نتعرف على أن الطريقة المقترحة لديها أفضل توازن بين السرعة والدقة بشكل شامل. إذا قارنا بين YOLOv7-tiny-SiLU و YOLOv5-N (r6.1) ، يكون الطريقة الحالية أسرع بـ ١٢٧ إطارًا في الثانية وأكثر دقة بنسبة ١٠.٧٪ من حيث AP. بالإضافة إلى ذلك ، YOLOv7 لديها AP بنسبة ٥١.٤٪ في معدل إطار ١٦١ في الثانية ، في حين يكون لـ PPYOLOE-L نفس AP فقط بمعدل إطار ٧٨ في الثانية. من حيث استخدام العوامل ، يكون YOLOv7 أقل بنسبة ٤١٪ من العوامل مقارنةً بـ PPYOLOE-L. إذا قارنا YOLOv7-X بسرعة تواصل بيانات ١١٤ إطارًا في الثانية مع YOLOv5-L (r6.1) مع سرعة تحليل ٩٩ إطارًا في الثانية ، يمكن أن يحسن YOLOv7-X AP بمقدار ٣.٩٪. إذا قورن YOLOv7-X بــ YOLOv5-X (r6.1) بنفس الحجم ، فإن سرعة تواصل البيانات في YOLOv7-X تكون أسرع بـ ٣١ إطارًا في الثانية. بالإضافة إلى ذلك ، من حيث كمية المعاملات والحسابات ، يقلل YOLOv7-X بنسبة ٢٢٪ من المعاملات و٨٪ من الحساب مقارنةً بـ YOLOv5-X (r6.1) ، ولكنه يحسن AP بنسبة ٢.٢٪ ([المصدر](https://arxiv.org/pdf/2207.02696.pdf)). + +## النظرة العامة + +كاشف الكائنات في الوقت الحقيقي هو جزء مهم في العديد من أنظمة رؤية الحاسوب ، بما في ذلك التتبع متعدد الكائنات والقيادة التلقائية والروبوتات وتحليل صور الأعضاء. في السنوات الأخيرة ، تركز تطوير كاشفات الكائنات في الوقت الحقيقي على تصميم هياكل فعالة وتحسين سرعة التحليل لمعالجات الكمبيوتر المركزية ومعالجات الرسومات ووحدات معالجة الأعصاب (NPUs). يدعم YOLOv7 كلاً من GPU المحمول وأجهزة الـ GPU ، من الحواف إلى السحابة. + +على عكس كاشفات الكائنات في الوقت الحقيقي التقليدية التي تركز على تحسين الهياكل ، يُقدم YOLOv7 تركيزًا على تحسين عملية التدريب. يتضمن ذلك وحدات وطرق تحسين تُصمم لتحسين دقة كشف الكائنات دون زيادة تكلفة التحليل ، وهو مفهوم يُعرف بـ "الحقيبة القابلة للتدريب للمجانيات". + +## الميزات الرئيسية + +تُقدم YOLOv7 عدة ميزات رئيسية: + +1. **إعادة تعيين نموذج المعاملات**: يقترح YOLOv7 نموذج معاملات معين مخطط له ، وهو استراتيجية قابلة للتطبيق على الطبقات في شبكات مختلفة باستخدام مفهوم مسار انتشار التدرج. + +2. **التسمية الديناميكية**: تدريب النموذج مع عدة طبقات إخراج يبرز قضية جديدة: "كيفية تعيين أهداف ديناميكية لإخراج الفروع المختلفة؟" لحل هذه المشكلة ، يقدم YOLOv7 طريقة تسمية جديدة تسمى تسمية الهدف المرشدة من الخشن إلى الدقيقة. + +3. **التحجيم الموسع والمركب**: يقترح YOLOv7 طرق "التحجيم الموسع" و "التحجيم المركب" لكاشف الكائنات في الوقت الحقيقي التي يمكن أن تستخدم بشكل فعال في المعاملات والحسابات. + +4. **الكفاءة**: يمكن للطريقة المقترحة بواسطة YOLOv7 تقليل بشكل فعال حوالي 40٪ من المعاملات و 50٪ من الحساب لكاشف الكائنات في الوقت الحقيقي الأولى من حيث الدقة والسرعة في التحليل. + +## أمثلة على الاستخدام + +في وقت كتابة هذا النص ، لا تدعم Ultralytics حاليًا نماذج YOLOv7. لذلك ، سيحتاج أي مستخدمين مهتمين باستخدام YOLOv7 إلى الرجوع مباشرة إلى مستودع YOLOv7 على GitHub للحصول على تعليمات التثبيت والاستخدام. + +وفيما يلي نظرة عامة على الخطوات النموذجية التي يمكنك اتباعها لاستخدام YOLOv7: + +1. قم بزيارة مستودع YOLOv7 على GitHub: [https://github.com/WongKinYiu/yolov7](https://github.com/WongKinYiu/yolov7). + +2. اتبع التعليمات الموجودة في ملف README لعملية التثبيت. يتضمن ذلك عادةً استنساخ المستودع ، وتثبيت التبعيات اللازمة ، وإعداد أي متغيرات بيئة ضرورية. + +3. بمجرد الانتهاء من عملية التثبيت ، يمكنك تدريب النموذج واستخدامه وفقًا لتعليمات الاستخدام الموجودة في المستودع. ينطوي ذلك عادةً على إعداد مجموعة البيانات الخاصة بك ، وتكوين معلمات النموذج ، وتدريب النموذج ، ثم استخدام النموذج المدرب لأداء كشف الكائنات. + +يرجى ملاحظة أن الخطوات المحددة قد تختلف اعتمادًا على حالة الاستخدام الخاصة بك والحالة الحالية لمستودع YOLOv7. لذا ، يُوصى بشدة بالرجوع مباشرة إلى التعليمات المقدمة في مستودع YOLOv7 على GitHub. + +نأسف على أي إزعاج قد يسببه ذلك وسنسعى لتحديث هذا المستند بأمثلة على الاستخدام لـ Ultralytics عندما يتم تنفيذ الدعم لـ YOLOv7. + +## الاقتباسات والشكر + +نود أن نشكر كتاب YOLOv7 على مساهماتهم الهامة في مجال اكتشاف الكائنات في الوقت الحقيقي: + +!!! Quote "" + + === "BibTeX" + + ```bibtex + @article{wang2022yolov7, + title={{YOLOv7}: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors}, + author={Wang, Chien-Yao and Bochkovskiy, Alexey and Liao, Hong-Yuan Mark}, + journal={arXiv preprint arXiv:2207.02696}, + year={2022} + } + ``` + +يمكن العثور على ورقة YOLOv7 الأصلية على [arXiv](https://arxiv.org/pdf/2207.02696.pdf). قدم الكتاب عملهم علنياً، ويمكن الوصول إلى قاعدة الشيفرة على [GitHub](https://github.com/WongKinYiu/yolov7). نحن نقدر جهودهم في تقدم المجال وتوفير عملهم للمجتمع بشكل عام. diff --git a/ultralytics/docs/ar/models/yolov7.md:Zone.Identifier b/ultralytics/docs/ar/models/yolov7.md:Zone.Identifier new file mode 100755 index 0000000..a45e1ac --- /dev/null +++ b/ultralytics/docs/ar/models/yolov7.md:Zone.Identifier @@ -0,0 +1,2 @@ +[ZoneTransfer] +ZoneId=3 diff --git a/ultralytics/docs/ar/models/yolov8.md b/ultralytics/docs/ar/models/yolov8.md new file mode 100755 index 0000000..7b2082f --- /dev/null +++ b/ultralytics/docs/ar/models/yolov8.md @@ -0,0 +1,166 @@ +--- +comments: true +description: استكشف الميزات المثيرة لـ YOLOv8 ، أحدث إصدار من مكتشف الكائنات الحية الخاص بنا في الوقت الحقيقي! تعرّف على العمارات المتقدمة والنماذج المدرّبة مسبقًا والتوازن المثلى بين الدقة والسرعة التي تجعل YOLOv8 الخيار المثالي لمهام الكشف عن الكائنات الخاصة بك. +keywords: YOLOv8, Ultralytics, مكتشف الكائنات الحية الخاص بنا في الوقت الحقيقي, النماذج المدرّبة مسبقًا, وثائق, الكشف عن الكائنات, سلسلة YOLO, العمارات المتقدمة, الدقة, السرعة +--- + +# YOLOv8 + +## نظرة عامة + +YOLOv8 هو التطور الأخير في سلسلة YOLO لمكتشفات الكائنات الحية الخاصة بنا في الوقت الحقيقي ، والذي يقدم أداءً متقدمًا في مجال الدقة والسرعة. بناءً على التقدمات التي تم إحرازها في إصدارات YOLO السابقة ، يقدم YOLOv8 ميزات وتحسينات جديدة تجعله الخيار المثالي لمهام الكشف عن الكائنات في مجموعة واسعة من التطبيقات. + + + +## الميزات الرئيسية + +- **العمارات المتقدمة للظهر والعنق:** يعتمد YOLOv8 على عمارات الظهر والعنق على أحدث طراز ، مما يؤدي إلى تحسين استخراج الميزات وأداء الكشف عن الكائنات. +- **Ultralytics Head بدون إثبات خطافي:** يعتمد YOLOv8 على Ultralytics Head بدون إثبات خطافي ، مما يسهم في زيادة الدقة وتوفير وقت مكشف أكثر كفاءة مقارنةً بالطرق التي تعتمد على الإثبات. +- **توازن مثالي بين الدقة والسرعة محسَّن:** بتركيزه على الحفاظ على توازن مثالي بين الدقة والسرعة ، فإن YOLOv8 مناسب لمهام الكشف عن الكائنات في الوقت الحقيقي في مجموعة متنوعة من المجالات التطبيقية. +- **تشكيلة من النماذج المدرّبة مسبقًا:** يقدم YOLOv8 مجموعة من النماذج المدرّبة مسبقًا لتلبية متطلبات المهام المختلفة ومتطلبات الأداء ، مما يجعل من السهل إيجاد النموذج المناسب لحالتك الاستخدامية الخاصة. + +## المهام والأوضاع المدعومة + +تقدم سلسلة YOLOv8 مجموعة متنوعة من النماذج ، يتم تخصيص كلًا منها للمهام المحددة في رؤية الحاسوب. تم تصميم هذه النماذج لتلبية متطلبات مختلفة ، بدءًا من الكشف عن الكائنات إلى مهام أكثر تعقيدًا مثل تقسيم الصور إلى أجزاء واكتشاف نقاط المفاتيح والتصنيف. + +تمت تحسين كل نوع من سلسلة YOLOv8 للمهام التي تخصها ، مما يضمن أداء ودقة عاليين. بالإضافة إلى ذلك ، تتوافق هذه النماذج مع أوضاع تشغيل مختلفة بما في ذلك [الاستدلال](../modes/predict.md) ، [التحقق](../modes/val.md) ، [التدريب](../modes/train.md) و [التصدير](../modes/export.md) ، مما يسهل استخدامها في مراحل مختلفة من عملية التطوير والتنفيذ. + +| النموذج | أسماء الملف | المهمة | استدلال | التحقق | التدريب | التصدير | +|-------------|----------------------------------------------------------------------------------------------------------------|----------------------------------------------|---------|--------|---------|---------| +| YOLOv8 | `yolov8n.pt` `yolov8s.pt` `yolov8m.pt` `yolov8l.pt` `yolov8x.pt` | [الكشف](../tasks/detect.md) | ✅ | ✅ | ✅ | ✅ | +| YOLOv8-seg | `yolov8n-seg.pt` `yolov8s-seg.pt` `yolov8m-seg.pt` `yolov8l-seg.pt` `yolov8x-seg.pt` | [تقسيم الصور إلى أجزاء](../tasks/segment.md) | ✅ | ✅ | ✅ | ✅ | +| YOLOv8-pose | `yolov8n-pose.pt` `yolov8s-pose.pt` `yolov8m-pose.pt` `yolov8l-pose.pt` `yolov8x-pose.pt` `yolov8x-pose-p6.pt` | [المواقق/نقاط المفاتيح](../tasks/pose.md) | ✅ | ✅ | ✅ | ✅ | +| YOLOv8-cls | `yolov8n-cls.pt` `yolov8s-cls.pt` `yolov8m-cls.pt` `yolov8l-cls.pt` `yolov8x-cls.pt` | [التصنيف](../tasks/classify.md) | ✅ | ✅ | ✅ | ✅ | + +توفر هذه الجدولة نظرة عامة على متغيرات نموذج YOLOv8 ، مما يسلط الضوء على قابليتها للتطبيق في مهام محددة وتوافقها مع أوضاع تشغيل مختلفة مثل الاستدلال والتحقق والتدريب والتصدير. يعرض مرونة وقوة سلسلة YOLOv8 ، مما يجعلها مناسبة لمجموعة متنوعة من التطبيقات في رؤية الحاسوب. + +## مقاييس الأداء + +!!! الأداء + + === "الكشف (COCO)" + + انظر إلى [وثائق الكشف](https://docs.ultralytics.com/tasks/detect/) لأمثلة عن الاستخدام مع هذه النماذج المدربة مسبقًا على [COCO](https://docs.ultralytics.com/datasets/detect/coco/) ، التي تضم 80 فئة مدربة مسبقًا. + + | النموذج | حجم

(بيكسل) | معدل الكشفالتحقق

50-95 | سرعة

CPU ONNX

(متوسط) | سرعة

A100 TensorRT

(متوسط) | معلمات

(مليون) | FLOPs

(مليون) | + | ------------------------------------------------------------------------------------ | --------------------- | -------------------- | ------------------------------ | ----------------------------------- | ------------------ | ----------------- | + | [YOLOv8n](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n.pt) | 640 | 37.3 | 80.4 | 0.99 | 3.2 | 8.7 | + | [YOLOv8s](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8s.pt) | 640 | 44.9 | 128.4 | 1.20 | 11.2 | 28.6 | + | [YOLOv8m](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8m.pt) | 640 | 50.2 | 234.7 | 1.83 | 25.9 | 78.9 | + | [YOLOv8l](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8l.pt) | 640 | 52.9 | 375.2 | 2.39 | 43.7 | 165.2 | + | [YOLOv8x](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x.pt) | 640 | 53.9 | 479.1 | 3.53 | 68.2 | 257.8 | + + === "الكشف (صور مفتوحة V7)" + + انظر إلى [وثائق الكشف](https://docs.ultralytics.com/tasks/detect/) لأمثلة عن الاستخدام مع هذه النماذج المدربة مسبقًا على [Open Image V7](https://docs.ultralytics.com/datasets/detect/open-images-v7/)، والتي تضم 600 فئة مدربة مسبقًا. + + | النموذج | حجم

(بيكسل) | معدل الكشفالتحقق

50-95 | سرعة

CPU ONNX

(متوسط) | سرعة

A100 TensorRT

(متوسط) | معلمات

(مليون) | FLOPs

(مليون) | + | ----------------------------------------------------------------------------------------- | --------------------- | -------------------- | ------------------------------ | ----------------------------------- | ------------------ | ----------------- | + | [YOLOv8n](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n-oiv7.pt) | 640 | 18.4 | 142.4 | 1.21 | 3.5 | 10.5 | + | [YOLOv8s](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8s-oiv7.pt) | 640 | 27.7 | 183.1 | 1.40 | 11.4 | 29.7 | + | [YOLOv8m](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8m-oiv7.pt) | 640 | 33.6 | 408.5 | 2.26 | 26.2 | 80.6 | + | [YOLOv8l](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8l-oiv7.pt) | 640 | 34.9 | 596.9 | 2.43 | 44.1 | 167.4 | + | [YOLOv8x](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x-oiv7.pt) | 640 | 36.3 | 860.6 | 3.56 | 68.7 | 260.6 | + + === "تقسيم الصور إلى أجزاء (COCO)" + + انظر إلى [وثائق التقسيم](https://docs.ultralytics.com/tasks/segment/) لأمثلة عن الاستخدام مع هذه النماذج المدرّبة مسبقًا على [COCO](https://docs.ultralytics.com/datasets/segment/coco/)، والتي تضم 80 فئة مدربة مسبقًا. + + | النموذج | حجم

(بيكسل) | معدل التقسيمالتحقق

50-95 | معدل التقسيمالأقنعة

50-95 | سرعة

CPU ONNX

(متوسط) | سرعة

A100 TensorRT

(متوسط) | معلمات

(مليون) | FLOPs

(مليون) | + | -------------------------------------------------------------------------------------------- | --------------------- | -------------------- | -------------------- | ------------------------------ | ----------------------------------- | ------------------ | ----------------- | + | [YOLOv8n-seg](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n-seg.pt) | 640 | 36.7 | 30.5 | 96.1 | 1.21 | 3.4 | 12.6 | + | [YOLOv8s-seg](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8s-seg.pt) | 640 | 44.6 | 36.8 | 155.7 | 1.47 | 11.8 | 42.6 | + | [YOLOv8m-seg](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8m-seg.pt) | 640 | 49.9 | 40.8 | 317.0 | 2.18 | 27.3 | 110.2 | + | [YOLOv8l-seg](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8l-seg.pt) | 640 | 52.3 | 42.6 | 572.4 | 2.79 | 46.0 | 220.5 | + | [YOLOv8x-seg](https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x-seg.pt) | 640 | 53.4 | 43.4 | 712.1 | 4.02 | 71.8 | 344.1 | + + === "التصنيف (ImageNet)" + + انظر إلى [وثائق التصنيف](https://docs.ultralytics.com/tasks/classify/) لأمثلة عن الاستخدام مع هذه النماذج المدرّبة مسبقًا على [ImageNet](https://docs.ultralytics.com/datasets/classify/imagenet/)، والتي تضم 1000 فئة مدربة مسبقًا. + + | النموذج | حجم

(بيكسل) | دقة أعلى

أعلى 1 | دقة أعلى

أعلى 5 | سرعة

CPU ONNX

(متوسط) | سرعة

A100 TensorRT

(متوسط) | معلمات

(مليون) | FLOPs